GCP – Driving diversity in AI: a new Google for Startup Accelerator for Women Founders from Europe & Israel

Post Content

Read More for the details.


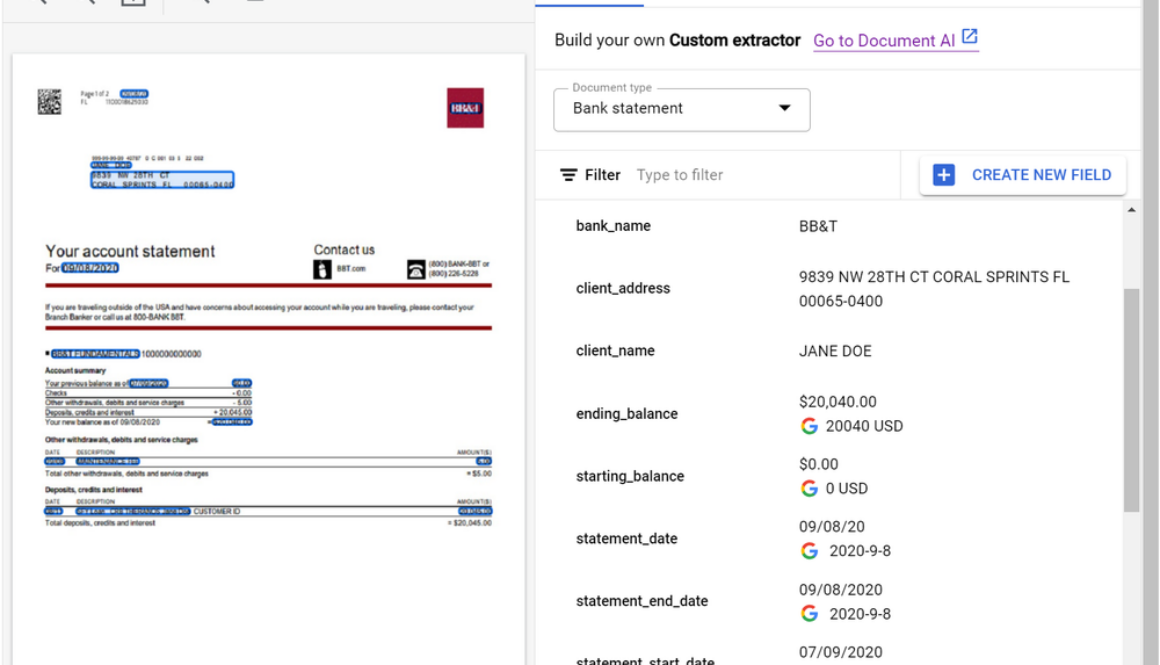

Today, we are excited to announce that the Document AI Custom Extractor, powered by generative AI, is Generally Available (GA), open to all customers, and ready for production use through APIs and the Google Cloud Console. The Custom Extractor, built with Google’s foundation models, helps parse data from structured and unstructured documents quickly and with high accuracy.

In the past, developers trained discrete models by using thousands of samples for each document type and spending a significant amount of time to achieve production-ready accuracy. In contrast, generative AI enables data extraction from a wide array of documents, with orders of magnitude less training data, and in a fraction of the time.

In spite of the benefits of this new technology, implementing foundation models across document processing can be cumbersome. Developers need to manage facets such as converting documents to text, managing document chunks, optimizing extraction prompts, developing datasets, managing model lifecycles, and more.

Custom Extractor, powered by generative AI, helps solve these challenges so developers can create extraction processors faster and more effectively. The new product allows foundation models to be used out of the box, fine-tuned, or used for auto-labeling datasets through a simple workflow. Moreover, generative AI predictions are now covered under the Document AI SLA.

The result is a faster and more efficient way for customers and partners to implement generative AI for their document processing workflows. Whether to extract fields from documents with free-form text (such as contracts) or complex layouts (such as invoices or tax forms), customers and partners can now use the power of generative AI at an enterprise-ready level. Developers can simply post a document to an endpoint and get structured data in return with no training required.
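As a minimal sketch of that request/response cycle, the Python below builds a call to the Document AI REST `processors.process` method and pulls the extracted fields out of the JSON response. It uses only the standard library. The project ID, location, processor ID, and field names such as `invoice_id` are hypothetical placeholders, and obtaining an OAuth access token is assumed and omitted.

```python
import base64
import json


def build_process_request(project_id: str, location: str, processor_id: str,
                          pdf_bytes: bytes) -> tuple[str, str]:
    """Return (url, json_body) for a Document AI processors.process call."""
    # Regional endpoint, e.g. https://us-documentai.googleapis.com for location "us".
    url = (
        f"https://{location}-documentai.googleapis.com/v1/"
        f"projects/{project_id}/locations/{location}/"
        f"processors/{processor_id}:process"
    )
    body = {
        "rawDocument": {
            # The document is sent inline as base64-encoded bytes.
            "content": base64.b64encode(pdf_bytes).decode("ascii"),
            "mimeType": "application/pdf",
        }
    }
    return url, json.dumps(body)


def extract_fields(response: dict) -> dict[str, str]:
    """Map each extracted entity type to its text, keeping the most confident mention."""
    best: dict[str, tuple[float, str]] = {}
    for entity in response.get("document", {}).get("entities", []):
        field = entity.get("type", "")
        text = entity.get("mentionText", "")
        confidence = float(entity.get("confidence", 0.0))
        if field not in best or confidence > best[field][0]:
            best[field] = (confidence, text)
    return {field: text for field, (_, text) in best.items()}
```

To send the request, POST the body to the URL with an `Authorization: Bearer <token>` header and `Content-Type: application/json`; the `google-cloud-documentai` client library wraps the same call if you prefer not to handle REST directly.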

During public preview, developers cut time to production, obtained higher accuracies, and unlocked new use cases like extracting data from contracts. Let’s hear directly from a few customers:

“Our partnership with Google Cloud continues to provide innovative solutions for Iron Mountain’s Intelligent Document Processing (IDP) and Workflow Automation capabilities powered by Iron Mountain InSight®. Document AI’s Custom Extractor enables us to leverage the power of generative AI to classify and extract data from unstructured documents in a faster and more effective way. By using this new product and with features such as auto-labeling, we are able to implement document processors in hours vs days or weeks. We are able to then build repeatable solutions, which can be delivered at scale for our customers across many industries and geographies.” – Adam Williams, Vice President, Head of Platforms, Iron Mountain

“Our collaboration with Google marks a transformative leap in the Intelligent Document Processing (IDP) space. By integrating Google Cloud’s Document AI Custom Extractor with Automation Anywhere’s Document Automation and Co-Pilot products, we’re leveraging generative AI to deliver a game-changing solution for our customers. With the integration of the Custom Extractor, we are not just improving document field extraction rates; we are also accelerating deployment time by more than 2x and cutting ongoing system maintenance costs in half. We are excited to partner with Google to shape the next generation of Intelligent Document Processing solutions and revolutionize how organizations automate document-intensive business processes.” – Michael Guidry, Head of Intelligent Document Processing Strategy, Automation Anywhere

In addition, the latest Workbench updates make it even easier to automate document processing:

- Price reduction: To better support production workloads, we reduced prices with volume-based tiers for Custom Extractor, Custom Classifier, Custom Splitter, and Form Parser. For more information, see Document AI pricing.
- Fine-tuning: Custom Extractor supports fine-tuning (Preview), so you can take accuracy to the next level by customizing foundation model results for your specific documents. Simply confirm extraction results within a dataset and fine-tune with the click of a button or an API call. This feature is currently available in the US region. For more information, see Fine tune and train by document type.
- Expanded region availability: Predictions from the Custom Extractor with generative AI are now available in the EU and northamerica-northeast1 regions. For more information, see Custom Extractor regional availability.
- Version lifecycle management: As Google improves foundation models, older foundation models are deprecated. Similarly, older processor versions will be deprecated six or more months after new stable versions are released. We are working on an auto-upgrade feature to simplify lifecycle management. For more information, see Managing processor versions.

To quickly see what the Custom Extractor with generative AI can do, check out the updated demo on the Document AI landing page. Simply load a sample document (the demo is limited to 15 pages), and within seconds you will see generative AI extraction in action.

If you are a developer, head over to Workbench on the Google Cloud Console to create a new extractor and to manage complex fields or customize foundation models’ predictions for your documents.

Or, to learn more, review documentation for the Custom Extractor with generative AI, review Document AI release notes, or learn more about Document AI and Workbench.


BigQuery makes it easy for you to gain insights from your data, regardless of its scale, size, or location. BigQuery Data Transfer Service (DTS) is a fully managed service that automates loading data into BigQuery from a variety of sources. DTS supports a wide range of data sources, including first-party data sources from the Google Marketing Platform (GMP), such as Google Ads, DV360, and SA360, as well as cloud storage providers such as Google Cloud Storage, Amazon S3, and Microsoft Azure.

BigQuery Data Transfer Service offers a number of benefits, including:

- Ease of use: DTS is a fully managed service, so you don’t need to worry about managing infrastructure or writing code. DTS can be accessed via the UI, API, or CLI, and you can get started loading your data in a few clicks in the UI.
- Scalability: DTS can handle large data volumes and a large number of concurrent users. Today, some of our largest customers move petabytes of data per day using DTS.
- Security: DTS uses a variety of security features to protect your data, including encryption and authentication. More recently, DTS has added capabilities to support regulated workloads without compromising ease of use.
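For the API route, a Cloud Storage transfer is a `TransferConfig` resource created with the DTS REST method `projects.locations.transferConfigs.create`. The sketch below only assembles that request. The project, dataset, bucket path, and table name are hypothetical, and the `params` keys (`data_path_template`, `destination_table_name_template`, `file_format`) are the Cloud Storage connector parameters as we understand them, so verify them against the connector documentation before use.

```python
import json


def gcs_transfer_request(project_id: str, location: str, dataset: str,
                         data_path: str, table: str) -> tuple[str, str]:
    """Return (url, json_body) for a scheduled Cloud Storage -> BigQuery transfer."""
    url = (
        "https://bigquerydatatransfer.googleapis.com/v1/"
        f"projects/{project_id}/locations/{location}/transferConfigs"
    )
    transfer_config = {
        "displayName": f"gcs-to-{table}",
        "dataSourceId": "google_cloud_storage",
        "destinationDatasetId": dataset,
        # Connector-specific parameters (names assumed; check the DTS docs).
        "params": {
            "data_path_template": data_path,
            "destination_table_name_template": table,
            "file_format": "CSV",
        },
        # DTS schedules use an English, cron-like syntax.
        "schedule": "every 24 hours",
    }
    return url, json.dumps(transfer_config)
```

The same configuration can be created from the CLI with `bq mk --transfer_config`, passing the `params` object as a JSON string.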

Based on customer feedback, we launched a number of key feature updates in the second half of 2023. These updates include broadening our support for Google’s first party data sources, enhancing the current portfolio of connectors to incorporate customer feedback and improve user experience, platform updates to support regulated workloads, as well as other important security features.

Let’s go over these updates in more detail.

- Display & Video 360 (DV360): A new DTS connector (in preview) allows you to ingest campaign configuration and reporting data into BigQuery for further analysis. This connector will benefit customers who want to improve campaign performance, optimize budgets, and target their audiences more effectively.
- Azure Blob Storage: The new DTS connector for Azure Blob Storage and Azure Data Lake Storage (now GA) completes our support for all the cloud storage providers. This allows customers to automatically transfer data from Azure Blob Storage into BigQuery on a scheduled basis.
- Search Ads 360 (SA360): We launched a new DTS connector (in preview) that supports the new SA360 API. It will replace the current (and now deprecated) SA360 connector, and customers are invited to try the new connector and prepare to migrate from the current one. The new connector is built on the latest SA360 API, which includes the platform enhancements described in the SA360 API documentation.
- Google Ads: Earlier in 2023, we launched a new connector for Google Ads that uses the new Google Ads API. It also added support for Performance Max campaigns.

  Recently, we enhanced the connector with a feature (in preview) that lets customers create custom reports from a Google Ads Query Language (GAQL) query. Customers can use their existing GAQL queries when configuring a transfer. With this feature, a transfer pulls in just the data customers need and can also bring in newer fields that a standard Google Ads transfer might not support.

  Additionally, we added support for Manager Accounts (MCC) with a larger number of accounts (8K) for our larger enterprise customers.
- YouTube Content Owners: Expanded support for YouTube Content Owner transfers by adding 27 new financial CMS reports. The DTS documentation for YouTube Content Owner transfers lists all supported reports.
- Amazon S3: Enabled hourly ingestion from Amazon S3 (vs. daily ingestion previously) to improve data freshness. This has been a consistently requested customer feature.

Your data is only useful if you can access it in a trusted and safe way. This is particularly important for customers running regulated workloads. To that end, we enabled:

- Customer-managed encryption keys (CMEK) support for scheduled queries, Cloud Storage transfers, and Azure Blob Storage transfers.
- Service account support for additional data sources: Campaign Manager, Teradata, Google Play, Amazon Redshift, Search Ads 360, and Display & Video 360.
- Data residency and access transparency controls for scheduled queries and Cloud Storage transfers.
- Support for Workforce Identity Federation for all data sources except YouTube Channel transfers.

As we look to 2024, we are excited to continue this rapid innovation with new connectors for marketing analytics, operational databases and enterprise applications. At the same time, we look to evolve our portfolio of connectors with capabilities that improve the “freshness” of data, and help customers with AI-assisted transformations.

Try BigQuery Data Transfer Service today and streamline loading your data into BigQuery!


BigQuery is a scalable, petabyte-scale data warehouse known for its efficient SQL query capabilities, and it is widely adopted by organizations worldwide.

While BigQuery offers exceptional performance, cost optimization remains critical for our customers. Together, Google Cloud and Deloitte have extensive experience assisting clients in optimizing BigQuery costs. In a previous blog post, we discussed how to reduce and optimize physical storage costs when implementing BigQuery. This blog post focuses on optimizing BigQuery computational costs through the utilization of the newly introduced BigQuery editions instead of the on-demand ($/TB) pricing model.

Selecting BigQuery editions slots with autoscaling is a compelling option for optimizing costs.

Many organizations select BigQuery’s on-demand pricing model for their workloads due to its simplicity and pay-per-query nature. However, the computational query analysis costs can be significant. Minimizing expenses associated with computational analysis is a prominent issue for some of our clients.

Deloitte helped clients to address the following challenges:

- Conducting a proof of concept to compare BigQuery editions with on-demand costs
- Where to manage BigQuery editions slots
- How to charge back to different departments
- Which criteria to use to group projects into reservations
- How to share idle slots from one reservation to others to reduce waste
- How many slots to commit for maximum ROI

Read on to learn how to address these challenges as you work to optimize costs on Google Cloud with BigQuery.

First, if you are not familiar with BigQuery editions, we recommend that you read Introduction to BigQuery editions and Introduction to slots autoscaling.

Then, let’s understand the key differences between on-demand and BigQuery editions. In the on-demand pricing model, each project can scale up to 2,000 slots for analysis. The price is based on the number of bytes scanned multiplied by the unit price, independent of the slot capacity used.

On the other hand, BigQuery editions are billed based on slot hours. BigQuery editions allows for autoscaling, meaning it can scale up to the maximum number of slots defined and scale down to zero once computational analysis jobs are finished. Note that there is a one-minute scale-down window.
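Put as formulas (the symbols are ours, for illustration): let $B_{\text{TiB}}$ be the data scanned in TiB, $P_{\text{TiB}}$ the on-demand unit price, $s(t)$ the number of slots allocated to the reservation at time $t$ (baseline plus autoscaled), $T$ the billing window in hours, and $P_{\text{slot-h}}$ the edition's price per slot-hour:

$$
\begin{aligned}
C_{\text{on-demand}} &= B_{\text{TiB}} \times P_{\text{TiB}} \\
C_{\text{editions}} &= P_{\text{slot-h}} \times \int_{0}^{T} s(t)\,dt
\end{aligned}
$$

The first cost depends only on how much data queries scan; the second depends only on how much capacity is held over time, which is why the same workload can cost very differently under the two models.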

If your workloads require more than the 2,000 slots per project available with on-demand pricing, use BigQuery editions, which let you configure higher capacity. Additionally, if you’re using Enterprise or Enterprise Plus edition, you can assign baseline slots for workloads that are sensitive to “cold start” issues, ensuring consistent performance. Finally, you can make a one- or three-year slot commitment to lower the unit price by 20% or 40%, respectively. Note: baseline and committed slots are charged 24/7, regardless of job activity.
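Because committed slots are billed around the clock, a commitment only pays off when those slots would otherwise be busy for a large enough share of the year. The sketch below works through that arithmetic with the 20% and 40% discounts quoted above. The per-slot-hour price is a parameter, and any value you pass (such as the one in the test) is a placeholder, not a published rate.

```python
from typing import Optional

HOURS_PER_YEAR = 24 * 365


def break_even_utilization(discount: float) -> float:
    """Fraction of hours a slot must be busy for a commitment to match pay-as-you-go.

    Committed cost per slot-year: (1 - discount) * price * HOURS_PER_YEAR
    PAYG cost per slot-year:      price * busy_hours
    They are equal when busy_hours / HOURS_PER_YEAR == 1 - discount.
    """
    return 1.0 - discount


def yearly_cost(slots: int, price_per_slot_hour: float, busy_fraction: float,
                discount: Optional[float] = None) -> float:
    """Yearly cost of `slots` slots: committed if `discount` is given, else PAYG."""
    if discount is not None:
        # Committed slots are charged 24/7, whether or not jobs are running.
        return slots * price_per_slot_hour * (1.0 - discount) * HOURS_PER_YEAR
    # PAYG slots are charged only while allocated (approximated by busy_fraction).
    return slots * price_per_slot_hour * busy_fraction * HOURS_PER_YEAR
```

So a one-year commitment (20% off) only wins if the slots would be busy more than 80% of the time, and a three-year commitment (40% off) more than 60%.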

Before creating a reservation, it’s essential to establish a BigQuery admin project within your organization dedicated solely to administering slot commitments and reservations. This project should not have any other workloads running inside it. By doing so, all slot charges are centralized in this project, streamlining administration.

There is value in managing all reservations in a single BigQuery admin project, because idle and unallocated slots are only shared among reservations within the same administration project. This practice ensures efficient slot utilization and is considered a best practice.

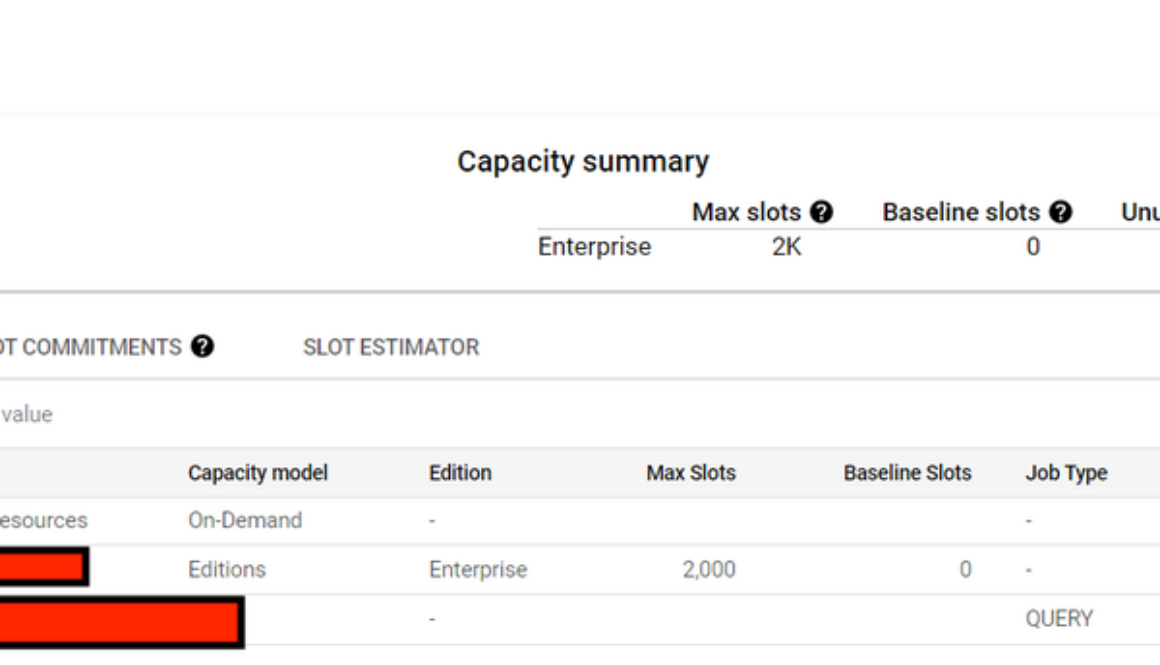

To determine whether BigQuery editions slots are more cost-effective than on-demand for your workload, conduct a proof of concept by selecting a project with high on-demand query consumption and try out the BigQuery editions slots model. First, create a reservation within the BigQuery admin project and assign a project to it. Start with 2,000 slots as the maximum reservation size, equivalent to the current on-demand capacity.
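The same proof-of-concept setup can be expressed with BigQuery’s reservation DDL (`CREATE RESERVATION` and `CREATE ASSIGNMENT`). The helper below simply renders those statements for the configuration described here: Enterprise edition, 0 baseline slots, and up to 2,000 autoscaling slots. The admin project, region, reservation, assignment ID, and workload project names are hypothetical; run the output in the admin project and confirm the option names against the DDL reference.

```python
def poc_reservation_ddl(admin_project: str, region: str, reservation: str,
                        workload_project: str, max_slots: int = 2000,
                        assignment_id: str = "poc-assignment") -> list[str]:
    """Render DDL for a baseline-free autoscaling Enterprise reservation plus one assignment."""
    res_path = f"`{admin_project}.region-{region}.{reservation}`"
    create_reservation = (
        f"CREATE RESERVATION {res_path}\n"
        "OPTIONS (\n"
        "  edition = 'ENTERPRISE',\n"
        "  slot_capacity = 0,  -- no baseline slots\n"
        f"  autoscale_max_slots = {max_slots}  -- same ceiling as on-demand\n"
        ");"
    )
    assignment_path = f"`{admin_project}.region-{region}.{reservation}.{assignment_id}`"
    create_assignment = (
        f"CREATE ASSIGNMENT {assignment_path}\n"
        "OPTIONS (\n"
        f"  assignee = 'projects/{workload_project}',\n"
        "  job_type = 'QUERY'\n"
        ");"
    )
    return [create_reservation, create_assignment]


for statement in poc_reservation_ddl("admin-proj", "us", "poc", "analytics-proj"):
    print(statement)
```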

You can then use the BigQuery administrative charts to determine slot cost. Additionally, you can run a query using the JOBS information schema to find out how many bytes have been scanned in the project to calculate the cost with the “on-demand” pricing model.
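A minimal sketch of that what-if calculation: the SQL sums the bytes processed by query jobs in the test window from the `INFORMATION_SCHEMA.JOBS` view, and a small function converts the total into an on-demand estimate. The region qualifier, dates, and price argument are placeholders, and the estimate ignores on-demand details such as per-query minimums, so treat it as an approximation.

```python
# Run in the project assigned to the reservation (region and dates are assumptions).
ON_DEMAND_WHAT_IF_SQL = """
SELECT
  SUM(total_bytes_processed) AS bytes_processed
FROM `region-us`.INFORMATION_SCHEMA.JOBS
WHERE job_type = 'QUERY'
  -- Skip parent script jobs so their child statements are not counted twice.
  AND statement_type != 'SCRIPT'
  AND creation_time BETWEEN TIMESTAMP('2024-01-01') AND TIMESTAMP('2024-01-31')
"""

BYTES_PER_TIB = 1024 ** 4


def on_demand_estimate(bytes_processed: int, price_per_tib: float) -> float:
    """Approximate what the same queries would cost under on-demand ($/TiB) pricing."""
    return bytes_processed / BYTES_PER_TIB * price_per_tib
```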

The following picture depicts a BigQuery slots reservation using Enterprise edition, with 2,000 max slots and 0 baseline slots, without any commitment. For the proof of concept, only one project is assigned to this reservation:

Figure 1: Reservation with baseline and autoscaling slots.

In our experience, using slots brought significant benefits in this case. However, you must perform your own assessment, because both the slot-based and on-demand models offer value depending on your specific query requirements. For projects with minimal traffic and straightforward management needs, where most jobs or queries complete within seconds and data scans are limited, the on-demand model might be a more suitable choice.

Figures 2 and 3 show this comparison between the BigQuery editions slot-cost model and the on-demand model.

Figure 2: BigQuery editions slots costs.

From Figure 2, we see that the BigQuery editions slot cost is $1,641.

Figure 3: What-if using BigQuery on-demand costs.

Figure 3 shows a cost of $4,952 for the same time period when using BigQuery on-demand cost.

Figure 4 shows that the initial autoscaling slot size we used was too large. In Figure 7, you will see the recommended slot size from the slot estimator to allow you to reset the slot size for cost optimization.

Figure 4: The maximum number of auto-scaling slots is too large.

In the BigQuery editions slots model, all slot costs are recorded in the central BigQuery admin project. Chargeback therefore becomes a crucial consideration when you need to bill different departments or teams for the resources they consume, for billing and accounting purposes.

An upcoming BigQuery slot allocation billing report will include a line for each reservation’s cost for each assigned query project. To facilitate chargeback, we recommend grouping projects by cost center, allowing for easy allocation. Until this new feature is available, you can query the BigQuery INFORMATION_SCHEMA views to determine each project’s slot-hour usage for chargeback purposes.
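One interim option is to split a reservation’s cost in proportion to the slot-hours each project consumed. The SQL below aggregates `total_slot_ms` per project and reservation from `INFORMATION_SCHEMA.JOBS_BY_ORGANIZATION`, which requires organization-level permissions; the region qualifier and 30-day window are assumptions. The allocation function is a hypothetical proportional policy, not a built-in BigQuery feature, and it spreads any idle baseline cost across the projects that used the reservation.

```python
SLOT_HOURS_BY_PROJECT_SQL = """
SELECT
  project_id,
  reservation_id,
  SUM(total_slot_ms) / (1000 * 60 * 60) AS slot_hours
FROM `region-us`.INFORMATION_SCHEMA.JOBS_BY_ORGANIZATION
WHERE creation_time >= TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 30 DAY)
  AND job_type = 'QUERY'
  AND statement_type != 'SCRIPT'
  AND reservation_id IS NOT NULL
GROUP BY project_id, reservation_id
"""


def allocate_cost(slot_hours_by_project: dict[str, float],
                  reservation_cost: float) -> dict[str, float]:
    """Split a reservation's total cost proportionally to each project's slot-hours."""
    total = sum(slot_hours_by_project.values())
    if total == 0:
        # Nothing ran: charge nobody rather than dividing by zero.
        return {project: 0.0 for project in slot_hours_by_project}
    return {project: reservation_cost * hours / total
            for project, hours in slot_hours_by_project.items()}
```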

To optimize slot usage, consider grouping projects by workload type, such as business intelligence (BI), standard extract, transform, and load (ETL), and data science projects. Each reservation can have unique characteristics shared by the group, defining baseline and maximum slot requirements. Grouping projects by cost center also enables efficient chargeback, with each cost center belonging to a different department (for example, BI, ETL, or data science).

Idle slots can be shared to avoid waste. By default, queries running in a reservation automatically use idle baseline slots from other reservations within the same administration project. Autoscaling slots are not considered idle capacity, as they are removed when they are no longer needed. Idle capacity is preemptible back to the original reservation as needed, irrespective of the query’s priority. This automatic, real-time sharing of idle slots helps ensure optimal utilization.

The following figure, from Introduction to slots autoscaling, shows a reservation with idle slot sharing:

Figure 5: Reservation with baseline, autoscaling slots and idle slots sharing.

Reservations use and add slots in the following priority:

1. Baseline slots
2. Idle slot sharing (if enabled)
3. Autoscale slots

For the ETL reservation, the maximum number of slots possible is the sum of the ETL baseline slots (700) and the dashboard baseline slots (300, if all slots are idle), along with the maximum number of autoscale slots (600). Therefore, the ETL reservation in this example could use a maximum of 1,600 slots.
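The priority order and the 1,600-slot ceiling can be checked with a small model. This is a simplified sketch, not BigQuery’s scheduler: it assumes the dashboard reservation’s 300 baseline slots are entirely idle, and it ignores autoscaling increments and the preemption of borrowed idle slots.

```python
from dataclasses import dataclass


@dataclass
class Reservation:
    baseline: int       # slots always allocated to this reservation
    autoscale_max: int  # extra slots autoscaling may add


def allocate(reservation: Reservation, demand: int, idle_from_others: int) -> dict[str, int]:
    """Fill demand from baseline first, then borrowed idle slots, then autoscaling."""
    from_baseline = min(demand, reservation.baseline)
    remaining = demand - from_baseline
    from_idle = min(remaining, idle_from_others)
    remaining -= from_idle
    from_autoscale = min(remaining, reservation.autoscale_max)
    return {"baseline": from_baseline, "idle": from_idle, "autoscale": from_autoscale}


etl = Reservation(baseline=700, autoscale_max=600)
# Demand beyond 700 + 300 + 600 cannot be served, so a very large demand caps at 1,600.
print(sum(allocate(etl, demand=5000, idle_from_others=300).values()))  # 1600
```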

By committing to a one- or three-year plan, you can get a 20% or 40% discount on pay-as-you-go (PAYG) slot prices. However, if your workloads mainly consist of scheduled jobs that are not always running, you might end up paying for idle slots 24/7. To find the best reservation settings, you can use the slot estimator tool to analyze your usage patterns. The tool suggests the optimal commitment level based on your usage, running a simulation in 100-slot increments to find the best ROI for your commitment level. Figure 6 shows an example of the tool.

Figure 6: Slot estimator for optimal cost settings.
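To make the estimator’s approach concrete, here is a hypothetical simulation in the same spirit. It replays hourly slot usage against candidate commitments in 100-slot steps, bills each commitment for every hour, covers any overflow with pay-as-you-go capacity, and keeps the cheapest option. It is not the slot estimator’s actual algorithm; the usage series, price, and discount are inputs you would supply.

```python
from typing import Optional


def best_commitment(hourly_usage: list[int], payg_price: float, discount: float,
                    step: int = 100) -> tuple[int, float]:
    """Return (committed_slots, total_cost) that minimizes cost over the usage window."""
    committed_price = payg_price * (1.0 - discount)
    peak = max(hourly_usage, default=0)
    best: Optional[tuple[int, float]] = None
    for commit in range(0, peak + step, step):
        # Committed slots are billed every hour, busy or idle.
        cost = commit * committed_price * len(hourly_usage)
        # Usage above the commitment is billed at the pay-as-you-go rate.
        cost += sum(max(0, used - commit) for used in hourly_usage) * payg_price
        if best is None or cost < best[1]:
            best = (commit, cost)
    assert best is not None  # range() always yields at least commit = 0
    return best
```

A steady 400-slot workload favors committing all 400 slots, while a workload busy only half the day at the same level is cheaper with no commitment at a 20% discount, matching the break-even reasoning above.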

The Google Cloud console also provides organization-level BigQuery editions recommendations in a dashboard, giving you a comprehensive overview of the entire system.

Figure 7: BigQuery editions Recommender Dashboard

Additionally, optimizing slots usage together with a 3-year commitment can further reduce costs.

Transitioning from on-demand ($/TB) to slots using BigQuery editions presents a significant opportunity for reducing analytics costs. By following the step-by-step guidance on conducting a proof of concept and transitioning to the BigQuery editions slots model, organizations can maximize their cost optimization efforts. We wish you a productive and successful cost optimization journey as you build with BigQuery! As always, reach out to us for support.


The recent growth in distributed, compute-intensive ML applications has prompted data scientists and ML practitioners to find easy ways to prototype and develop their ML models. Running your Jupyter notebooks and JupyterHub on Google Kubernetes Engine (GKE) can provide a way to run your solution with security and scalability built-in as core elements of the platform.

GKE is a managed container orchestration service that provides a scalable and flexible platform for deploying and managing containerized applications. GKE abstracts away the underlying infrastructure, making it easy to deploy and manage complex deployments.

Jupyterhub is a powerful, multi-tenant, server-based web application that allows users to interact with and collaborate on Jupyter notebooks. Users can create custom computing environments with custom images and computational resources in which to run their notebooks. “Zero to Jupyterhub for Kubernetes” (z2jh) is a Helm chart for installing Jupyterhub on Kubernetes, with numerous configuration options for complex user scenarios.

We are excited to announce a solution template that will help you get started with Jupyterhub on GKE. The template greatly simplifies using z2jh on GKE, offering a quick and easy way to set up Jupyterhub with a preconfigured GKE cluster, Jupyterhub configuration, and custom features. We also added features such as authentication and persistent storage to reduce the complexity of model prototyping and experimentation. In this blog post, we discuss the solution template, the Jupyterhub on GKE experience, the unique characteristics that come from running on GKE, and features such as custom authentication and persistent storage.

Running Zero to Jupyterhub on GKE provides a powerful platform for ML applications, but the installation process is complicated. To minimize friction for ML practitioners, our solution templates abstract away the infrastructure setup and solve common enterprise platform challenges, including authentication and security, and persistent storage for notebooks.

Security and Auth

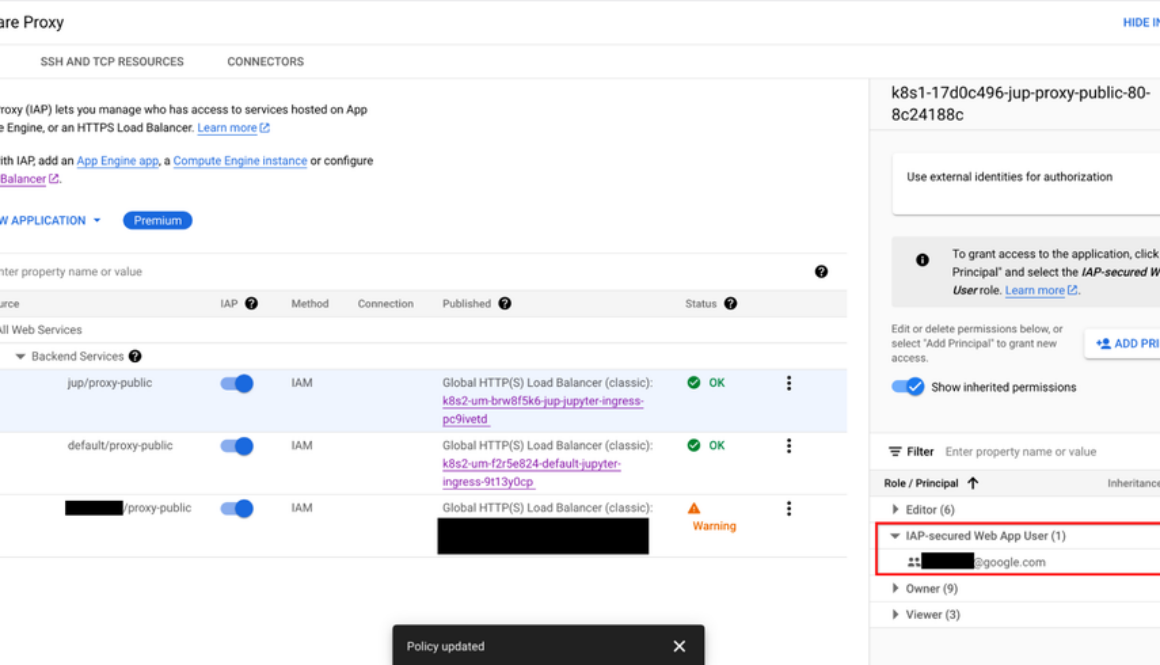

Granting the correct access to notebooks can be especially difficult when working with sensitive data. By default, Jupyterhub exposes a public endpoint that anyone can access. This endpoint should be locked down to prevent unintended access. Our solution uses Identity-Aware Proxy (IAP) to gate access to the public endpoint. IAP creates a central authorization layer for HTTPS access to the Jupyterhub application, using the application-level access model and enabling IAM-based access control to notebooks to make users’ data more secure. Adding authentication to Jupyterhub ensures that only valid users can reach their notebooks.

By default, the template reserves an IP address through Google Cloud IAP. Platform administrators can alternatively provide a domain to host their Jupyterhub endpoint, which will be guarded by IAP. Once IAP is configured, the platform administrator must update the service allowlist by granting users the “IAP-secured Web App User” role.

Now, when a user navigates to the Jupyterhub endpoint gated behind IAP, they are presented with a Google sign-in screen where they log in with their Google identity.
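Behind IAP, each request that reaches the backend carries the signed-in identity in headers, including `X-Goog-Authenticated-User-Email` (whose value is prefixed with `accounts.google.com:`) and the signed `X-Goog-IAP-JWT-Assertion`. The sketch below shows how a hypothetical Jupyterhub authenticator might derive a username from those headers. It is not the template’s code, the local-part naming scheme is an assumption, and a production authenticator should verify the JWT assertion’s signature and audience rather than trust the plain email header.

```python
from typing import Mapping, Optional

IAP_EMAIL_HEADER = "X-Goog-Authenticated-User-Email"
IAP_JWT_HEADER = "X-Goog-IAP-JWT-Assertion"
IAP_PREFIX = "accounts.google.com:"


def username_from_iap(headers: Mapping[str, str]) -> Optional[str]:
    """Return a notebook username derived from IAP headers, or None if they are absent.

    NOTE: this only checks that the JWT header is present; real code must
    validate the JWT before trusting the identity.
    """
    # HTTP header names are case-insensitive.
    lowered = {key.lower(): value for key, value in headers.items()}
    if IAP_JWT_HEADER.lower() not in lowered:
        return None
    email = lowered.get(IAP_EMAIL_HEADER.lower())
    if not email:
        return None
    if email.startswith(IAP_PREFIX):
        email = email[len(IAP_PREFIX):]
    # Hypothetical policy: use the lowercase local part of the address.
    return email.split("@", 1)[0].lower()
```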

Persistent storage

Jupyterhub on GKE does not come with an out-of-the-box persistent storage solution, so notebooks are lost when clusters are deleted. To persist notebook data, our templates provide options to integrate with Google storage solutions such as Filestore, GCSFuse, and Compute Engine Persistent Disk. Each offers different features for different use cases:

- Filestore: Supports dynamic provisioning and standard POSIX. Although persistent volumes have a minimum size of 1 TiB for the standard tier, Filestore provides multishare support to optimize costs.
- GCSFuse: Uses Cloud Storage buckets as persistent volumes but requires manual bucket creation; that is, the platform engineer must provision a bucket for each user. Cloud Storage can be managed through the Google Cloud console, and access control can be configured with IAM.
- Compute Engine Persistent Disk: Supports dynamic provisioning and can automatically scale while supporting different disk types.

To learn more about storage solutions, check out this guide.

The solution template uses Terraform with Helm charts to provision JupyterHub. Follow the step-by-step instructions in the README file to get started. The solution contains two groups of resources: platform-level and jupyterhub-level.

Platform-level resources are expected to be deployed once for each development environment by the system administrator. They include common infrastructure and Google Cloud service integrations that are shared by all users. System administrators can also reuse development environments that are already deployed.

- GKE cluster and node pool: Configured in the main.tf file, this module deploys a GKE cluster with a GPU node pool. GKE also provides alternative GPU and machine types.
- Kubernetes system namespaces and service accounts, along with the necessary IAM policy bindings.

The following resources are created when system administrators install Jupyterhub on the cluster. System administrators must reinstall Jupyterhub to apply any changes made to its configuration.

- Jupyterhub z2jh server: Spins up Jupyter notebook environments for users.
- IAP-related k8s deployments: These include the Ingress, Backend Configuration, and Managed Certificate that integrate Google Cloud IAP with Jupyterhub.
- Storage volumes: Depending on the user’s choice, storage volumes are created with Filestore, GCSFuse, or Persistent Disk.

GKE’s flexible container customization and node pool configurations work well with Jupyter’s concept of notebook profiles. Jupyterhub configuration offers a customizable number of preset profiles with predetermined Jupyter notebook images, memory, CPUs, GPUs, and more. Using profiles, engineers can take advantage of GKE infrastructure such as GPUs and TPUs to run their notebooks.
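As an illustration, z2jh profiles live under `singleuser.profileList` in the Helm values, and each profile’s `kubespawner_override` sets KubeSpawner options such as `image`, `cpu_limit`, `mem_limit`, `extra_resource_limits`, and `node_selector`. The Python below generates values for a CPU profile and a GPU profile. The image names, resource sizes, and GKE accelerator label value are assumptions to adapt to your own node pools. The script prints JSON, which Helm can read with `-f` because YAML is a superset of JSON.

```python
import json


def jupyterhub_values() -> dict:
    """Build z2jh Helm values with two notebook profiles (illustrative sizes)."""
    cpu_profile = {
        "display_name": "CPU",
        "description": "2 CPUs, 8 GB RAM",
        "default": True,
        "kubespawner_override": {
            "image": "jupyter/scipy-notebook:latest",
            "cpu_limit": 2,
            "mem_limit": "8G",
        },
    }
    gpu_profile = {
        "display_name": "GPU (1x NVIDIA T4)",
        "description": "4 CPUs, 16 GB RAM, 1 GPU",
        "kubespawner_override": {
            "image": "jupyter/tensorflow-notebook:latest",
            "cpu_limit": 4,
            "mem_limit": "16G",
            # Request one GPU and pin the Pod to a node pool with T4 GPUs.
            "extra_resource_limits": {"nvidia.com/gpu": "1"},
            "node_selector": {"cloud.google.com/gke-accelerator": "nvidia-tesla-t4"},
        },
    }
    return {"singleuser": {"profileList": [cpu_profile, gpu_profile]}}


if __name__ == "__main__":
    # e.g. python make_values.py > values.json; helm upgrade --install ... -f values.json
    print(json.dumps(jupyterhub_values(), indent=2))
```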

The combination of Jupyter and GKE offers a powerful yet simple solution for building, running, and managing AI workloads. Jupyterhub’s ease-of-use makes it a popular choice for machine learning models and data exploration. With GKE, Jupyterhub can go further by becoming more scalable and reliable.

You can also learn about running Jupyterhub with Ray here.

If you have any questions about using Jupyterhub with GKE, please raise an issue on our GitHub. Learn more about building AI platforms with GKE by visiting our User Guide.


Students pursuing undergraduate degrees in engineering, computer science, IT, and other related fields: Are you curious about how to apply training and certifications to your real-life, day-to-day experience, or to upskill your technical capabilities? If so, this blog is for you.

In September and October 2023, approximately 100k students from 700 educational campuses in India completed the Google Cloud Computing Foundations course, learning generative AI skills that they can use on the job.

These students completed the course with help from learning content provided by Google Cloud Study Jams, community-led study groups run by Google Developer Student Clubs (GDSC). These study groups help aspiring developers get hands-on training in Google Cloud technologies, from containerizing applications to data engineering to machine learning and AI. They are set up as study parties, for a range of cloud topics and skill levels, and they can be tailored to group needs.

The study jam events in India had a significant impact for student learners and facilitators:

- Students learned key foundational and technical skills to prepare them for their future careers.
- Student facilitators had the opportunity to gain a relevant Google Cloud certification.
- Nominated student facilitators gained leadership experience among their peers.

The Google Developer Student Clubs team in India started by reaching out to Google Cloud Developer Advocate Romin Irani to train student facilitators nominated from each GDSC educational institution. This train-the-trainer program focused on generative AI and cloud computing basics, and ran as a series of live sessions every week. The facilitators were then ready to manage the learning experience for students at their campuses.

In turn, student facilitators trained their students using learning material that is available to anyone, at any time. We encourage you to go check it out yourself. Materials included:

- Google Cloud Computing Foundations: Cloud Computing Fundamentals: Made up of videos, labs, and quizzes, this introductory content helps learners gain hands-on skills in Google Cloud infrastructure, networking, security, data, and AI and ML.
- The Arcade: This no-cost gamified learning experience offers gen AI technical labs and the opportunity to earn swag. The games change from month to month and must be completed within the month. The students in India worked on prompt engineering games.

Facilitators chose how they wanted to train their students. Some facilitators let students go through the training independently, acting merely as a point person for troubleshooting. Some taught the content digitally, and some in-person. Many facilitators took a hybrid approach.

Here’s what Himanshu Jagdale had to say about the facilitator experience:

“Being a Cloud Facilitator in Google Cloud Study Jams was a game-changer for my certification journey. It not only deepened my understanding of Google Cloud, but also allowed me to share knowledge, and collaborate with other facilitators who really helped me during my Associate Cloud Engineer certification. The hands-on labs and expert sessions by Romin Irani enriched my practical skills, making a significant contribution to my success in achieving the Google Cloud Associate Cloud Engineer certification.”

Participant Adarsh Rawat said:

“The Google Cloud Study Jam program was immensely beneficial to me. The hands-on labs and modules assisted me in building concepts and covering the certification syllabus. Honestly, I am a big fan of labs, as they allow for the coverage of most vital topics at once.”

If you’re a student with a passion for technology, we invite you to join a Google Developer Student Club near you. There are over 2,100 Google Developer Student Clubs around the world, and joining one can help you learn, grow, and build a network of like-minded individuals, as well as find out about upcoming study jam opportunities. (If you’re not a student but are looking to join a local community to stay connected and grow your skills, be sure to check out your local Google Developer Group.)

You can continue upskilling on Google Cloud by playing The Arcade at no cost, with new games published regularly. Not only does The Arcade help you learn new hands-on technical skills, it also lets you earn points to use toward Google Cloud swag.

You can also visit Google Cloud Skills Boost for on-demand, role-based training to help you build your skills and validate your knowledge, any time, anywhere. You’ll find a variety of training options for in-demand job roles, like data analyst, security engineer, cloud engineer, machine learning engineer, and more, at levels ranging from introductory to advanced.

Start 2024 strong by upskilling with Google Cloud.


As Kubernetes adoption grows, so do the challenges of managing costs for medium and large-scale environments. The State of Kubernetes Cost Optimization report highlights a compelling trend: “Elite performers take advantage of cloud discounts 16.2x more than Low performers.”

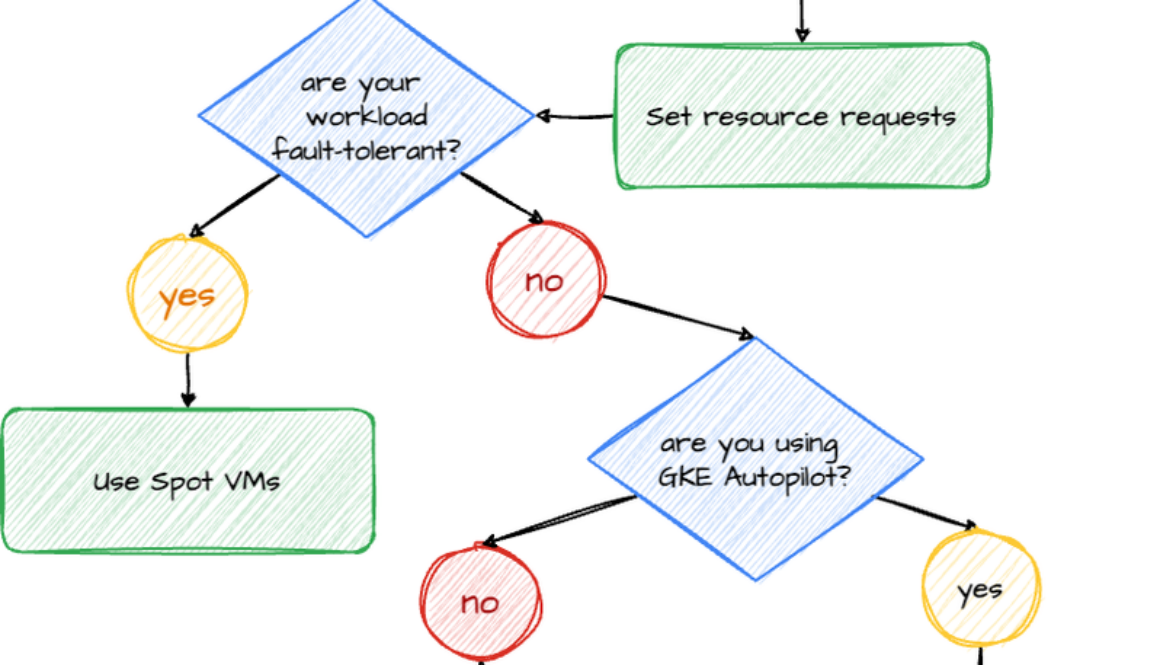

There are probably many reasons for this, but likely ones include these teams’ in-house expertise, their proficiency in overseeing large clusters, and targeted strategies that prioritize cost efficiency, including Spot VMs and committed use discounts (CUDs). In this blog post, we list best practices you can follow to create a cost-effective environment on Google Kubernetes Engine (GKE) and give an overview of the main cloud discounts available to GKE users.

Before selecting a cloud discount model for your GKE environment, you need to know how much computing power your applications actually use; otherwise, you may end up overestimating your resource needs. You can do this by setting resource requests and rightsizing your workloads, which helps reduce costs and improve reliability. Alternatively, you can create a VerticalPodAutoscaler (VPA) object to automate the analysis and adjustment of CPU and memory resources for Pods. Be sure to understand how VPA works before enabling it: it can either provide recommended resource values for manual updates or be configured to update those values for your Pods automatically.
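The following minimal sketch makes the two VPA modes concrete. It builds a VerticalPodAutoscaler manifest as a Python dict and prints it as JSON, which `kubectl apply -f` accepts. It assumes the `autoscaling.k8s.io/v1` API and a Deployment target. The Deployment name `frontend` is hypothetical. `updateMode: "Off"` only publishes recommendations, while `"Auto"` lets VPA apply them by recreating Pods. On GKE, vertical Pod autoscaling must be enabled on the cluster before the object has any effect.

```python
import json


def vpa_manifest(deployment: str, namespace: str = "default", auto_apply: bool = False) -> dict:
    """Build a VerticalPodAutoscaler manifest that targets one Deployment.

    updateMode "Off" only records recommendations (you change requests by hand);
    "Auto" lets VPA apply them by evicting and recreating Pods.
    """
    return {
        "apiVersion": "autoscaling.k8s.io/v1",
        "kind": "VerticalPodAutoscaler",
        "metadata": {"name": f"{deployment}-vpa", "namespace": namespace},
        "spec": {
            "targetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": deployment},
            "updatePolicy": {"updateMode": "Auto" if auto_apply else "Off"},
        },
    }


if __name__ == "__main__":
    # Recommendation-only mode for a hypothetical "frontend" Deployment.
    print(json.dumps(vpa_manifest("frontend"), indent=2))
```

Start in `"Off"` mode and review the recommendations, for example with `kubectl describe vpa frontend-vpa`. Switch to `"Auto"` only after that. This follows the advice above to understand VPA before letting it change your Pods.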

GKE Autopilot shifts the mental model of Kubernetes cost optimization, in that you pay only for the resources your Pods request. But did you know that you can still take advantage of committed use on a per-Pod level? Reduce your GKE Autopilot costs with Kubernetes Engine (Autopilot Mode) committed use discounts. Autopilot Mode CUDs, which are based on one- and three-year commitments, can save you 20% and 45% off on-demand prices, respectively. These discounts are measured in dollars per hour of equivalent on-demand spend. However, they do not cover GKE Standard, Spot Pods, or management fees.
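The sketch below roughly illustrates the arithmetic. It applies the one- and three-year Autopilot CUD rates quoted above to an hourly on-demand-equivalent spend. It assumes the commitment is fully used every hour. It also ignores Spot Pods and management fees, which these CUDs do not cover. The $10/hour figure is hypothetical.

```python
# Discount rates as quoted in this post; check current pricing before relying on them.
AUTOPILOT_CUD_DISCOUNT = {1: 0.20, 3: 0.45}
HOURS_PER_YEAR = 24 * 365


def committed_hourly_cost(on_demand_per_hour: float, years: int) -> float:
    """Hourly price of on-demand-equivalent spend fully covered by an Autopilot CUD."""
    if years not in AUTOPILOT_CUD_DISCOUNT:
        raise ValueError("Autopilot CUDs are one- or three-year commitments")
    return on_demand_per_hour * (1 - AUTOPILOT_CUD_DISCOUNT[years])


def yearly_savings(on_demand_per_hour: float, years: int) -> float:
    """Savings per year versus paying on-demand for the same usage."""
    saved_per_hour = on_demand_per_hour - committed_hourly_cost(on_demand_per_hour, years)
    return saved_per_hour * HOURS_PER_YEAR


if __name__ == "__main__":
    for term in (1, 3):
        print(term, round(committed_hourly_cost(10.0, term), 2), round(yearly_savings(10.0, term), 2))
```

Under these assumptions, $10/hour of on-demand-equivalent usage becomes $8/hour with a one-year commitment and $5.50/hour with a three-year commitment.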

Flexible CUDs add flexibility to your spending capabilities by eliminating the need to restrict your commitments to a single project, region, or machine series. With Flexible CUDs, you can see a 28% discount over your committed hourly spend amount for a one-year commitment and 46% for a three-year commitment. With these spend-based commits, you can use vCPUs and/or memory in any of the projects within a Cloud Billing account, across any region, and that belong to any eligible general-purpose and/or compute-optimized machine type.
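A Flexible CUD is a spend-based commitment pooled across a billing account. As a result, the bill for any hour depends on how much eligible spend lands on it. The following simplified model charges the discounted commitment fee every hour. It bills any eligible spend above the commitment at on-demand rates. This model is an assumption, not Google's billing engine, and the project names and amounts are hypothetical.

```python
from typing import Dict

# Discount rates as quoted in this post (one- and three-year terms).
FLEX_CUD_DISCOUNT = {1: 0.28, 3: 0.46}


def hourly_bill(eligible_spend_by_project: Dict[str, float], commitment: float, years: int) -> float:
    """Approximate one hour's charges under a spend-based Flexible CUD.

    Simplified model: the commitment is an on-demand-equivalent $/hour pooled
    across every project in the billing account. Its discounted fee is charged
    whether or not it is used, and eligible spend above it is billed on-demand.
    """
    fee = commitment * (1 - FLEX_CUD_DISCOUNT[years])
    pooled_spend = sum(eligible_spend_by_project.values())
    overage = max(0.0, pooled_spend - commitment)
    return fee + overage
```

Take a one-year commitment of $100/hour with $110/hour of eligible spend across two projects. This model gives $72 for the commitment plus $10 of overage, or $82. Now take a three-year commitment that sees only $40/hour of usage. You would still pay $54, more than the $40 on-demand price. This is why rightsizing before committing matters.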

For GKE Standard, resource-based CUDs offer up to 37% off the on-demand prices for a one-year commitment, and up to 70% for a three-year commitment for memory-optimized workloads. GKE Standard CUDs cover only memory and vCPUs, and GPU commitments are subject to availability constraints. To guarantee that the hardware in your commit is available, we recommend you purchase commitments with attached reservations.

Here’s a bold statement: Spot VMs can reduce your compute costs by up to 91%. They offer the same machine types, options, and performance as regular compute instances. But the preemptible nature of Spot VMs means that they can be terminated at any time. Therefore, they’re ideal for stateless, short-running batch jobs, or fault-tolerant workloads. If your application is fault tolerant (meaning it can shut down gracefully within 15 seconds and is resilient to possible instance preemptions), Spot VMs can significantly reduce your costs.
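The criteria in this paragraph can be expressed as a rule of thumb. The sketch below is illustrative only, not an official decision tree. The `Workload` fields and option names are hypothetical. The 15-second threshold comes from the text above.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    tolerates_preemption: bool  # can lose its node at any time and recover (e.g. stateless, retryable)
    shutdown_seconds: float     # measured time to shut down gracefully
    steady_baseline: bool       # runs continuously at a predictable level


def pricing_option(w: Workload, grace_seconds: float = 15.0) -> str:
    """Suggest a pricing option using the criteria described in this post."""
    if w.tolerates_preemption and w.shutdown_seconds <= grace_seconds:
        return "spot"
    if w.steady_baseline:
        return "committed use discount"
    return "on-demand"
```

In practice the options combine. A common pattern is to cover a steady baseline with a CUD and add Spot VMs for fault-tolerant burst capacity.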

CUDs, meanwhile, also offer substantial cost savings for businesses that leverage cloud services. To maximize these savings, be sure to allocate resources strategically, ensure that workloads are appropriately sized, and employ optimization tools to assist in sizing your CUDs. By allocating resources efficiently, you can avoid unnecessary costs while maintaining consistent application performance. Adhere to the guidelines in this article to enjoy notable savings on your cloud services.

To determine when to use Spot VMs and when to choose CUD, check out the diagram below.

The State of Kubernetes Cost Optimization report talks about all these techniques in depth. Get your copy now, and dive deeper into the insights by exploring the series of blogs derived from this report:

- Setting resource requests: the key to Kubernetes cost optimization
- Maximizing Reliability, Minimizing Costs: Right-Sizing Kubernetes Workloads
- How not to sacrifice user experience in pursuit of Kubernetes cost optimization
- Improve Kubernetes cost and reliability with the new Policy Controller policy bundle

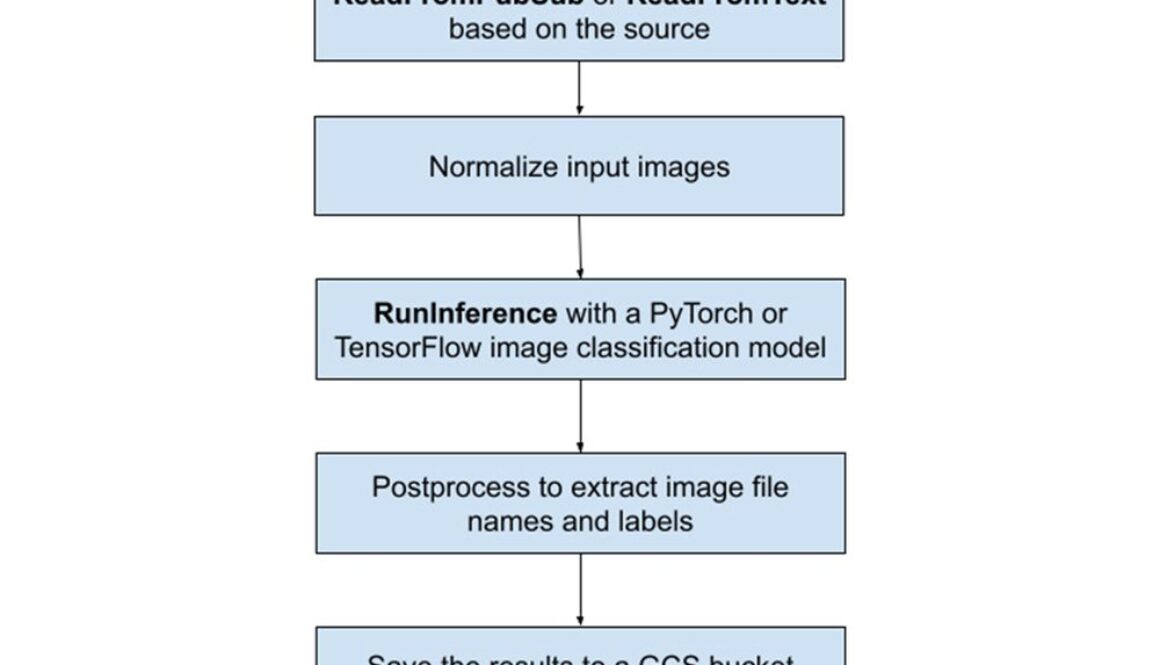

As part of BigQuery’s integrated suite of capabilities, Dataflow ML enables scalable local and remote inference with batch and streaming pipelines. It also facilitates data preparation for model training and the processing of model predictions. Our new Dataflow ML Starter project provides all of the essential scaffolding and boilerplate required to quickly and easily create and launch a Beam pipeline. Specifically, the Dataflow ML Starter project contains a basic Beam RunInference pipeline that deploys image classification models to classify the given images. As shown in Figure 1, the pipeline first either reads a Cloud Storage (GCS) file that contains image GCS paths or subscribes to a Pub/Sub source to receive image GCS paths. It then pre-processes the input images, runs a PyTorch or TensorFlow image classification model, post-processes the results, and finally writes all predictions to the GCS output file.
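The stage order described above can be sketched in plain Python to show how data flows through it: read paths, pre-process, run the model, post-process, and write. This is not Beam code and not the starter project's implementation. The real pipeline uses Beam's `RunInference` transform. Here, `load` and `model` are hypothetical stand-ins for image decoding and a PyTorch or TensorFlow classifier.

```python
from typing import Callable, Iterable, List


def run_pipeline(
    paths: Iterable[str],
    load: Callable[[str], List[float]],           # hypothetical: fetch, decode, resize one image
    model: Callable[[List[float]], List[float]],  # hypothetical: one score per class label
    labels: List[str],
) -> List[str]:
    """Plain-Python mirror of the starter pipeline's stage order; not Beam code."""
    predictions = []
    for raw in paths:
        path = raw.strip()
        if not path:                 # skip blank lines in the input file
            continue
        features = load(path)        # pre-process
        scores = model(features)     # inference (RunInference in the real pipeline)
        best = max(range(len(scores)), key=lambda i: scores[i])  # post-process: top-1 class
        predictions.append(f"{path},{labels[best]}")            # one output line per image
    return predictions


if __name__ == "__main__":
    # Toy stand-ins so the sketch runs without a model or Cloud Storage access.
    def toy_load(path: str) -> List[float]:
        return [1.0] if path.endswith("a.jpg") else [0.0]

    def toy_model(features: List[float]) -> List[float]:
        return [features[0], 1.0 - features[0]]

    for line in run_pipeline(["gs://bucket/a.jpg", "", "gs://bucket/b.jpg"], toy_load, toy_model, ["cat", "dog"]):
        print(line)
```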

The project illustrates the entire Dataflow ML development process by walking the user through each step, including:

- Developing the Beam pipeline with a local Python environment and creating unit tests to validate the pipeline
- Running the Beam RunInference job using DataflowRunner with CPUs
- Improving the inference speed and using GPUs, building and testing a custom container using GCE VMs, and providing some Dockerfile samples
- Demonstrating how to use Pub/Sub as the streaming source to classify images
- Demonstrating how to package all the code and apply a Dataflow Flex Template

In summary, the project produces a standard template that serves as a boilerplate, which can be easily modified to suit your specific needs.

To get started, visit the GitHub repository and follow the instructions. We believe that this starter project will be a valuable resource for anyone working with Dataflow ML. We are delighted to share our knowledge with the community and look forward to seeing how it helps developers and data engineers achieve their goals. Please do not forget to star it if you find it helpful!

Data is increasingly valuable, powering critical dashboards, machine learning applications, and even large language models (LLMs). Consequently, every minute of data downtime (the period of time data is wrong, incomplete, or inaccessible) is more and more costly. For example, a data pipeline failure at a digital advertising platform company could result in hundreds of thousands in lost revenue.

Unfortunately, it is impossible to anticipate all the ways data can break with testing, and attempting to maintain a view of inconsistencies across your entire environment would be incredibly time-consuming.

Monte Carlo, a data observability software provider, together with Google Cloud, can significantly minimize data downtime by using cutting-edge Google Cloud services for ETL, data warehousing, and data analytics. Combined with the robust capabilities of Monte Carlo’s data observability, you can better detect, resolve, and prevent data incidents at scale.

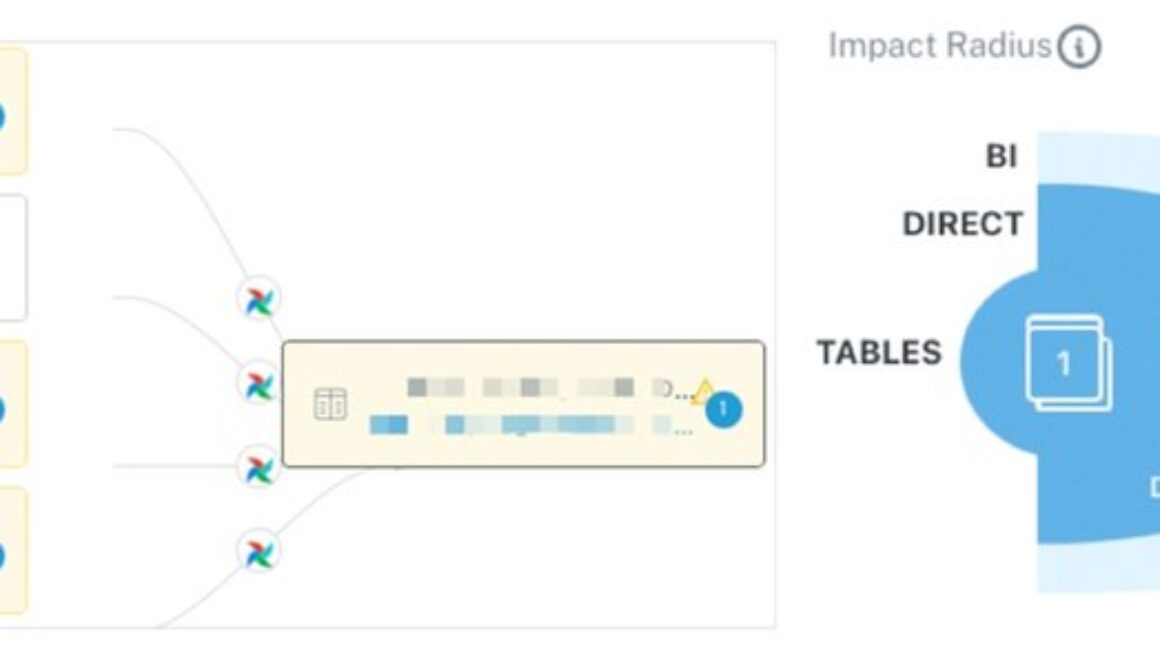

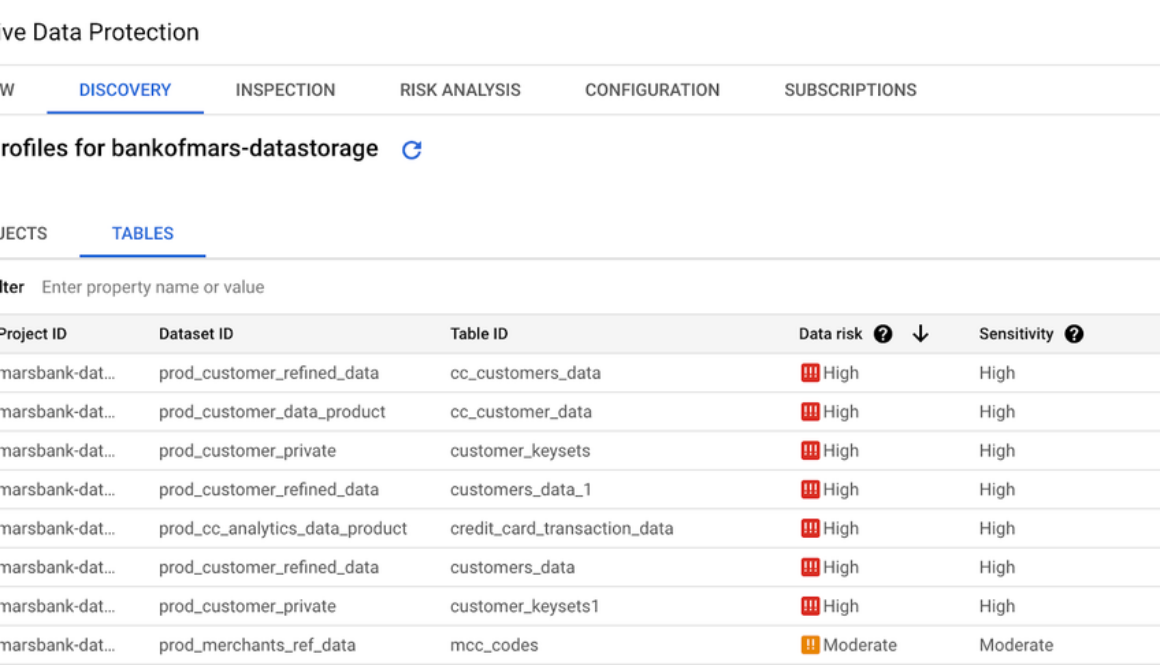

Monte Carlo’s data lineage shows the assets upstream with anomalies that may be related to the volume incident, while Impact Radius shows who will be affected to help inform smart triaging.

This is all enabled by the metadata, access to query logs, and other BigQuery features that help structure your data, as well as the APIs provided by Looker.
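Monte Carlo does not describe its detection models here. As a stand-in, the sketch below shows the simplest form of volume monitoring. It flags a table when its latest row count is more than three standard deviations from its recent history. The row counts would come from table metadata such as BigQuery exposes. The threshold and sample numbers are assumptions, and this is not Monte Carlo's method.

```python
from statistics import mean, stdev
from typing import List


def volume_anomaly(history: List[int], latest: int, threshold: float = 3.0) -> bool:
    """Return True if `latest` is more than `threshold` standard deviations
    away from the mean of the historical row counts."""
    if len(history) < 2:
        return False                  # not enough history to estimate spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu           # perfectly flat history: any change is unusual
    return abs(latest - mu) / sigma > threshold


if __name__ == "__main__":
    daily_rows = [1000, 1020, 980, 1010, 990]
    print(volume_anomaly(daily_rows, 120))   # a sudden drop in loaded rows
    print(volume_anomaly(daily_rows, 1005))  # a normal day
```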

This reference architecture enables these key outcomes:

1. Mitigate the risk and impact of bad data: Reducing the number of incidents and improving time-to-resolution lowers the likelihood that bad data will cause negative reputational, competitive, and financial outcomes.

2. Increase data adoption, trust, and collaboration: Catching incidents first, and proactively communicating during the incident management process, helps build data trust and adoption. Data quality monitors and dashboards are the enforcement and visibility mechanisms required for creating effective, proactive data SLAs.

3. Reduce the time and resources spent on data quality: Studies show data teams spend 30% or more of their workweek on data quality and other maintenance-related tasks, rather than on tasks that unlock additional value from data and data infrastructure investments. Data observability reduces the time data teams need to scale their data quality monitoring and to resolve incidents.

4. Optimize the performance and cost of data products: When data teams move fast, they build “pipeline debt” over time. Slow-running data pipelines utilize excess compute, cause data quality issues, and create a poor user experience for data consumers, who must wait for data to return, dashboards to load, and AI models to update.

Monte Carlo recently expanded to a hybrid-SaaS offering using native Google Cloud technologies. The following diagram shows a Google-Cloud-hosted agent and datastore architecture for connecting BigQuery, Looker, and other data pipeline solutions to your Monte Carlo platform.

Additional architecture options include deployments where:

- the MC agent is hosted within the Monte Carlo cloud environment and object storage remains as a Google Cloud Storage bucket
- both the MC agent and object storage are hosted within the MC cloud environment

These deployment options help you choose how much control you want over your connection to the MC service as well as how you want to manage the agent/collector infrastructure.

The Google-Cloud-hosted agent and datastore option provides several capabilities, built on the following components:

- Process and enrich data in BigQuery – BigQuery is a serverless and cost-effective enterprise data platform. Its architecture lets you use SQL to query and enrich enterprise-scale data, and its scalable, distributed analysis engine lets you query terabytes in seconds and petabytes in minutes. Integrated ML and support for BI Engine let you easily analyze the data and gain business insights.
- Visualize data and insights in Looker – Looker is a comprehensive business intelligence tool that consolidates your data via integration with numerous data sources. Looker lets users craft and personalize dashboards automatically, turning data into meaningful business metrics and dimensions. Linking Looker with BigQuery is straightforward, as users can add BigQuery projects and specific datasets directly as Looker data sources.
- Deploy the Monte Carlo agent and object storage – Monte Carlo uses an agent to connect to data warehouses, data lakes, BI, and other ETL tools in order to extract metadata, logs, and statistics. The agent collects no record-level data. However, Monte Carlo customers sometimes want to sample a small subset of individual records within the platform as part of troubleshooting or root-cause analysis. If you need this sampling data to persist within your own cloud, you can keep it in dedicated object storage in Google Cloud Storage. To deploy the agent in your Google Cloud environment, use the appropriate infrastructure wrapper on the Terraform Registry. This launches a Docker Hub image to Cloud Run for the agent and creates a Cloud Storage bucket for sampling data. The agent has a stable HTTPS endpoint that accesses the public internet and authorizes via Cloud IAM.
- Deploy object storage for Monte Carlo sampling data – Monte Carlo customers sometimes want to sample a small subset of individual records within the platform for troubleshooting or root-cause analysis. They may want, or be required, to persist this sampling data within their own cloud, whether or not they choose to deploy and manage the Monte Carlo agent. The appropriate infrastructure wrapper for generating these resources is available on the Terraform Registry (GitHub repository).
- Integrate Monte Carlo and BigQuery – Once the agent is deployed and you’ve established connectivity, create a read-only service account with the appropriate permissions and provide the service credentials via the Monte Carlo onboarding wizard (details for BigQuery setup here). By parsing the metadata and query logs in BigQuery, Monte Carlo can automatically detect incidents and display end-to-end data lineage within days of deployment, without any additional configuration.
- Integrate Monte Carlo and Looker – You can also easily integrate Looker and Looker Git (formerly the LookML code repository), which allows Monte Carlo to map dependencies between Looker objects and other components of your modern data stack. To do this, create a Looker API key that gives Monte Carlo access to metadata on your Dashboards, Looks, and other Looker objects. You can then connect via private/public keys, which provide more granular control and connectivity, or via HTTPS, which is recommended if you have many repos to connect to MC.
- Integrate Monte Carlo with Cloud Composer and Cloud Dataplex – The Monte Carlo agent can be integrated with both Cloud Composer and Cloud Dataplex to enhance data reliability and observability across your Google Cloud data ecosystem. This gives you quicker identification of data incidents and more efficient root-cause analysis, and helps teams maintain high data quality and reliability across complex, multi-faceted data environments within Google Cloud.
- Integrate Monte Carlo and other ETL tools – Organizations’ data platforms often consist of multiple solutions that manage the data lifecycle, from ingestion, orchestration, and transformation to discovery/access, visualization, and more. Depending on their size, some organizations may even use multiple solutions within the same category. For example, in addition to BigQuery, some organizations store and process data within other ETL tools powered by Google Cloud. Most of these integrations require only an API key or service account to connect them to your Google-Cloud-hosted Monte Carlo agent. For more details on a specific integration, refer to Monte Carlo’s documentation.
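Lineage and Impact Radius both rest on a dependency graph built from query logs and BI metadata. As a hypothetical sketch, a breadth-first search over such a graph returns every asset downstream of a table that has an incident. This is not Monte Carlo's implementation, and the asset names are made up.

```python
from collections import deque
from typing import Dict, List, Set


def downstream_impact(lineage: Dict[str, List[str]], incident_asset: str) -> Set[str]:
    """Return every asset reachable downstream of `incident_asset`.

    `lineage` maps each asset to the assets that read from it
    (tables feeding tables, tables feeding Looker dashboards, and so on).
    """
    affected: Set[str] = set()
    queue = deque([incident_asset])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in affected:      # visit each asset once, even with shared parents
                affected.add(child)
                queue.append(child)
    return affected


if __name__ == "__main__":
    lineage = {
        "raw.orders": ["analytics.daily_sales"],
        "analytics.daily_sales": ["looker:sales_dashboard", "looker:revenue_look"],
    }
    print(sorted(downstream_impact(lineage, "raw.orders")))
```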

In conclusion, deploying data observability with Monte Carlo and Google Cloud offers an invaluable solution to the increasingly critical issue of data downtime. By leveraging advanced Google Cloud services and Monte Carlo’s observability capabilities, organizations can not only mitigate risks associated with bad data but also enhance trust, collaboration, and efficiency across their data landscape. As we’ve explored, the integration of tools like BigQuery and Looker with Monte Carlo’s architecture creates a powerful synergy, optimizing data quality and performance while reducing the time and resources spent on data maintenance.

If you’re looking to elevate your organization’s data management strategies and minimize data downtime, consider exploring the integration of Monte Carlo with your Google Cloud environment. Start by evaluating your current data setup and identifying areas where Monte Carlo’s observability can bring immediate improvements. Remember, in the world of data, proactive management is key to unlocking its full potential.

Ready to take the next step? Reach out to the Monte Carlo or Google Cloud team today to begin your journey towards enhanced data observability and reliability. Let’s transform the way your organization handles data!

Businesses generate massive amounts of speech data every day, from customer calls to product demos to sales pitches. This data can transform your business by improving customer satisfaction, helping you prioritize product improvements, and streamlining business processes. While AI models have improved in the past few months, connecting speech data to these models in a scalable and governed way can be a challenge, and can limit customers’ ability to gain insights at scale.

Today, we are excited to announce the preview of Vertex AI transcription models in BigQuery. This new capability can make it easy to transcribe speech files and combine them with other structured data to build analytics and AI use cases — all through the simplicity and power of SQL, while providing built-in security and governance. Using Vertex AI capabilities, you can also tune transcription models to your data and use them from BigQuery.

Previously, customers built separate AI pipelines to transcribe speech data for analytics. These pipelines were siloed from BigQuery, and customers wrote custom infrastructure to bring the transcribed data into BigQuery for analysis. This increased time to value, made governance challenging, and required teams to manage multiple systems for a given use case.

Google Cloud’s Speech to Text V2 API offers customers a variety of features to make transcription easy and efficient. One of these features is the ability to choose a specific domain model for transcription. This means that you can choose a model that is optimized for the type of audio you are transcribing, such as customer service calls, medical recordings, or universal speech. In addition to choosing a specialized model, you also have the flexibility to tune the model for your own data using model adaptation. This can allow you to improve the accuracy of transcriptions for your specific use case.

Once you’ve chosen a model, you can create object tables in BigQuery that map to the speech files stored in Cloud Storage. Object tables provide fine-grained access control, so users can only generate transcriptions for the speech files for which they are given access. Administrators can define row-level access policies on object tables and secure access to the underlying objects.

To generate transcriptions, simply register your off-the-shelf or adapted transcription model in BigQuery and invoke it over the object table using SQL. The transcriptions are returned as a text column in the BigQuery table. This process makes it easy to transcribe large volumes of audio data without having to worry about the underlying infrastructure. Additionally, the fine-grained access control provided by object tables ensures that customer data is secure.

Here is an example of how to use the Speech to Text V2 API with BigQuery:

This query generates transcriptions for all of the speech files in the object table and returns the results as a new text column named transcription.

Once you’ve transcribed the speech to text, there are three ways you can build analytics on the resulting text data:

- Use BigQuery ML for common natural language use cases: BigQuery ML provides wide-ranging support for training and deploying text models. For example, you can use BigQuery ML to identify customer sentiment in support calls, or to classify product feedback into different categories. If you are a Python user, you can also use BigQuery Studio to run pandas functions for text analysis.
- Join transcribed metadata with other structured data stored in BigQuery tables: This allows you to combine structured and unstructured data for powerful use cases. For example, you could identify high customer lifetime value (CLTV) customers with negative support call sentiment, or shortlist the most requested product features from customer feedback.
- Call the PaLM API directly from BigQuery to summarize, classify, or prompt Q&A on transcribed data: PaLM is a powerful AI language model that can be used for a wide variety of natural language tasks. For example, you could use PaLM to generate summaries of support calls, or to classify customer feedback into different categories.

After transcription, you can unlock powerful search functionalities by building indexes optimized for needle-in-the-haystack queries, made possible by BigQuery’s search and indexing capabilities.

This integration also unlocks new generative LLM applications on audio files. You can use BigQuery’s powerful built-in ML functions to get further insights from the transcribed text, including ML.GENERATE_TEXT, ML.GENERATE_TEXT_EMBEDDING, ML.UNDERSTAND_TEXT, ML.TRANSLATE, etc., for various tasks like classification, sentiment analysis, entity extraction, extractive question answering, summarization, rewriting text in a different style, ad copy generation, concept ideation, embeddings and translation.

The above capabilities are now available in preview. Get started by following the documentation or demo, or contact your Google sales representative.

Cloud computing has become an essential part of many businesses and, as a Technical Account Manager, I have seen firsthand how cloud computing can help businesses save money and improve their agility. However, one of the challenges that businesses face when moving to the cloud is forecasting their cloud costs. If you are not careful, your cloud costs can quickly spiral out of control. That is where cloud cost forecasting comes in.

Cloud cost forecasting is essentially the process of predicting your future cloud usage. Forecasting is important in order to get funding, budget or support to start a new project in the cloud. Predicting your cloud costs can help you determine if you are on track with your FinOps strategy.

For many companies, though, accurate cloud forecasting is one of the most difficult things to get right. In the State of FinOps survey, advanced practitioners reported variances of about +/- 5% from their predictions, while less advanced practitioners reported variances of +/- 20%.

When I hear the word “forecasting,” I immediately think of weather predictions and, specifically, seasonality. For example, in the spring you know that the weather will be milder than in the winter, and that a snowstorm or a heatwave is very unlikely. You can also expect more rain, since spring is usually the rainiest season of the year.

If we apply this concept to cloud workloads, we will also discover seasons and events that will have a significant impact on cloud usage. For example, organizations may experience large spikes in demand during sales periods or year-end holidays. This will have a direct impact on consumption, which will result in additional costs. As a consequence, you will not only need to know your previous data to predict usage, but also the key factors that may influence your future consumption.
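One simple way to fold known seasons and events into a forecast is to scale a baseline month by a multiplier for each affected month. The multipliers below are hypothetical. In practice, they would come from past peaks and from planned events such as sales periods or year-end holidays.

```python
from typing import Dict, List


def seasonal_forecast(baseline: float, multipliers: Dict[str, float], months: List[str]) -> Dict[str, float]:
    """Scale a baseline monthly cost by per-month seasonal or event multipliers.

    A multiplier of 1.0 (the default for unlisted months) means a typical month.
    """
    return {month: round(baseline * multipliers.get(month, 1.0), 2) for month in months}


if __name__ == "__main__":
    # Hypothetical: holiday sales lift usage by 40% in November and 60% in December.
    print(seasonal_forecast(10_000.0, {"Nov": 1.4, "Dec": 1.6}, ["Oct", "Nov", "Dec"]))
```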

Ask yourself:

- Does your business have seasonal peaks?
- Do you have any upcoming new projects, marketing events, or migrations planned that may result in additional users or consumption for your product?
- How often do you collate this data for future input into forecasting cycles?

The other key element in accurate forecasting is historical data. By analyzing your past usage, you can get a good idea of how your workloads are likely to change in the future, which helps you plan accordingly and avoid unexpected costs. For example, if your usage has been increasing steadily over the past few months as you migrate your applications, you can expect it to continue increasing. And if your usage fluctuates significantly, you may need to implement a more robust cost-management strategy.
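To quantify a steady trend like the migration example, you can fit a straight line to monthly spend and extend it forward. The sketch below is a plain least-squares fit on hypothetical figures. It deliberately ignores seasonality, which you would layer on separately.

```python
from typing import List, Tuple


def linear_trend(monthly_costs: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of cost = intercept + slope * month_index."""
    n = len(monthly_costs)
    if n < 2:
        raise ValueError("need at least two months of history")
    x_mean = (n - 1) / 2
    y_mean = sum(monthly_costs) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(monthly_costs))
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    slope = sxy / sxx
    return y_mean - slope * x_mean, slope


def project_trend(monthly_costs: List[float], months_ahead: int) -> List[float]:
    """Extend the fitted line past the last observed month."""
    intercept, slope = linear_trend(monthly_costs)
    n = len(monthly_costs)
    return [intercept + slope * (n + k) for k in range(months_ahead)]


if __name__ == "__main__":
    # Hypothetical spend growing by about 10 per month during a migration.
    print(project_trend([100.0, 110.0, 120.0, 130.0], 2))
```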

Ask yourself:

- Is my historical usage in line with my defined seasonal peaks (if applicable)?
- Does my historical usage line up with expected business events?
- Did I appropriately investigate any forecast variations from my actuals? What were the results of the variance analysis? Can I apply any lessons learned to my forecasting model?
- What does my long-term trendline look like?

By understanding your historical data, you can make informed decisions about your future costs. TIP: In Google Cloud you can keep track of your consumption by exporting Cloud Billing data to BigQuery.
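Once billing data is exported, a query along these lines totals monthly cost per service, net of credits. The sketch only builds the SQL string. It assumes the standard usage cost export schema, with the fields `invoice.month`, `service.description`, `cost`, and the repeated `credits` record. The table name is a placeholder for your own export table.

```python
def monthly_cost_by_service_sql(table: str) -> str:
    """Return SQL that totals cost per invoice month and service, net of credits,
    for a Cloud Billing export table given as project.dataset.table."""
    if "`" in table:
        raise ValueError("table name must not contain backticks")
    # Credit amounts are negative, so adding them to cost yields net cost.
    return f"""
SELECT
  invoice.month AS invoice_month,
  service.description AS service,
  SUM(cost)
    + SUM(IFNULL((SELECT SUM(c.amount) FROM UNNEST(credits) AS c), 0)) AS net_cost
FROM `{table}`
GROUP BY invoice_month, service
ORDER BY invoice_month, net_cost DESC
""".strip()


if __name__ == "__main__":
    # Placeholder: substitute your own billing export table.
    print(monthly_cost_by_service_sql("my-project.billing_export.gcp_billing_export_v1_XXXXXX"))
```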

Now that you know your past data, and possible future events, you can start predicting your future cloud costs. There are a number of tools practitioners use to blend these two together, for example:

- Your native cloud cost management tool (in GCP, that’s the GCP Billing console)
- Cost Estimation APIs
- Spreadsheets
- Machine learning models using BQML or Vertex AI
- Third-party forecasting tools

The best way to forecast depends on your specific needs and budget. If you have a small budget and are comfortable using spreadsheets, you can use that method. However, if you have a larger budget and need more accurate forecasts, you may want to consider using a cloud cost management tool or a third-party forecasting tool.

All things being equal, the out-of-the-box prediction feature in the Google Cloud Billing console is a great place to start. The console provides an estimated cost for the current month based on your usage. This forecast is a combined total of actual cost to date and the projected cost trend. It is an approximation based on historical usage of all the projects that belong to that billing account. You can also adjust this forecast by filtering your report by project, service, SKU, or folder in your organization.
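The console's forecast combines actual cost to date with a projected trend. The sketch below is a much cruder run-rate version of the same idea. It is an assumption, not how the Billing console computes its forecast, which draws on historical usage across the billing account.

```python
import calendar
from datetime import date


def month_end_estimate(actual_to_date: float, today: date) -> float:
    """Actual cost so far plus the month-to-date daily average carried to month end.

    Treats `today` as a fully elapsed day.
    """
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    daily_average = actual_to_date / today.day
    return actual_to_date + daily_average * (days_in_month - today.day)


if __name__ == "__main__":
    # Hypothetical: $3,000 spent in the first 10 days of a 30-day month.
    print(month_end_estimate(3000.0, date(2024, 4, 10)))
```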

Cloud forecasting is more manageable for a single project than for the entire organization. It is advised to forecast each project individually and then combine the forecasts to improve accuracy. The divide-and-conquer strategy is always a good approach to start small and end up with a very comprehensive forecast.

I was recently involved in a forecasting exercise for a retail customer to calculate their spending for the upcoming year. The customer had nothing specific on which to base their yearly commitment with Google Cloud, so we, the Google Cloud account team, prepared a rough estimate of their future cloud costs.

One of the good things about this customer was that they were not new to GCP, so we had several advantages in predicting their costs:

- We could get information from their historical data
- We knew their cloud journey and current maturity level
- We were also very familiar with their main workloads
- They shared their general plans for the following year with us

These were just a few advantages. On the other hand, since we didn’t know the details of each project, we couldn’t predict each project separately and combine the predictions later. Instead, we divided the spending and aggregated it by type of service: Compute Engine, GKE, Analytics, Networking, etc. To complete the estimate, we followed these steps:

1. Group all spending by service category (analytics, compute, networking, etc.).
2. Calculate the average of the current and past two months’ spending for each service category.
3. Calculate the average of the same period from a year ago.
4. Compare the two averages to identify trends and assign each category its growth percentage.
5. Factor in all upcoming plans. Apply corrections for workloads that are stable, new, or intermittent.
6. Share the estimate with the customer and adapt it based on their feedback.
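Steps 2 through 5 of the estimate translate into a small calculation. This hypothetical sketch makes three assumptions. Spending is already grouped by category (step 1). Each category's growth repeats over the coming year. The step 5 corrections are manual per-category multipliers. Step 6, reviewing the result with the customer, remains a human task.

```python
from statistics import mean
from typing import Dict, List, Optional, Tuple


def estimate_annual_spend(
    by_category: Dict[str, Tuple[List[float], List[float]]],
    corrections: Optional[Dict[str, float]] = None,
) -> Dict[str, float]:
    """Estimate next year's spend per service category.

    `by_category` maps a category (step 1) to
    (the last three months of spend, the same three months one year earlier).
    """
    corrections = corrections or {}
    estimate: Dict[str, float] = {}
    for category, (recent, year_ago) in by_category.items():
        recent_avg = mean(recent)       # step 2: current and past two months
        year_ago_avg = mean(year_ago)   # step 3: same period a year ago
        growth = recent_avg / year_ago_avg - 1 if year_ago_avg else 0.0  # step 4
        monthly = recent_avg * (1 + growth)  # assumption: the same growth repeats next year
        # Step 5: manual correction for new, stable, or intermittent workloads (1.0 = none).
        estimate[category] = round(monthly * 12 * corrections.get(category, 1.0), 2)
    return estimate


if __name__ == "__main__":
    history = {
        "compute": ([100.0, 100.0, 100.0], [80.0, 80.0, 80.0]),
        "networking": ([50.0, 50.0, 50.0], [50.0, 50.0, 50.0]),
    }
    # Hypothetical: a planned migration adds about 10% to networking.
    print(estimate_annual_spend(history, {"networking": 1.1}))
```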

Only the passage of time can determine how accurate our predictions are; there are always unforeseen factors that can affect the outcome.

When forecasting your cloud costs, it’s important to be realistic. Don’t just assume that your costs will stay the same. Factor in changes in your cloud usage, changes in your cost drivers, and changes in the cloud market or pricing. Something that I have learned while helping my customers understand their cloud costs is that some products are used consistently, while others are used sporadically. For example, a product might be used heavily for one month and then not used at all for three months.

Lastly, cloud cost forecasting is an ongoing process that requires regular review and recalculation. Making small changes throughout the year will yield better results than waiting until the end of the year to make changes.

Now that you know how to forecast your future cloud costs, you can start tracking and monitoring your actuals versus your forecast and use variance analysis.
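A basic variance check compares each forecast line item with actual spend. The sketch below reports each variance as a percentage and flags items outside a tolerance. The 5% default echoes the variance that advanced practitioners reported in the State of FinOps survey. The figures are hypothetical.

```python
from typing import Dict, Tuple


def variance_report(
    forecast: Dict[str, float], actual: Dict[str, float], tolerance: float = 0.05
) -> Dict[str, Tuple[float, bool]]:
    """Return (variance in percent, outside tolerance?) for each forecast line item.

    Variance is (actual - forecast) / forecast; positive means overspend.
    """
    report: Dict[str, Tuple[float, bool]] = {}
    for item, planned in forecast.items():
        spent = actual.get(item, 0.0)
        if planned:
            variance = (spent - planned) / planned
        else:
            variance = 0.0 if spent == 0 else float("inf")  # unplanned spend
        report[item] = (round(variance * 100, 1), abs(variance) > tolerance)
    return report


if __name__ == "__main__":
    print(variance_report({"compute": 1000.0, "analytics": 500.0},
                          {"compute": 1030.0, "analytics": 600.0}))
```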

Want to know the latest from Google Cloud? Find it here in one handy location. Check back regularly for our newest updates, announcements, resources, events, learning opportunities, and more.

Tip: Not sure where to find what you’re looking for on the Google Cloud blog? Start here: Google Cloud blog 101: Full list of topics, links, and resources.

Announcing the launch of Cross-Cloud Materialized Views: To help customers on their cross-cloud analytics journey, today we are thrilled to announce the public preview of BigQuery Omni cross-cloud materialized views (aka cross-cloud MVs). Cross-cloud MVs allow customers to easily create a summary materialized view on GCP from base data assets available on another cloud. Cross-cloud MVs are automatically and incrementally maintained as base tables change, meaning only a minimal data transfer is necessary to keep the materialized view on GCP in sync. The result is an industry-first capability that empowers customers to perform frictionless, efficient, and cost-effective cross-cloud analytics.
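To show why incremental maintenance keeps data transfer small, here is a toy materialized view of `SUM(amount) GROUP BY key`. It applies only inserted and deleted rows instead of rescanning the base table. It illustrates the general technique only and is not how BigQuery Omni implements cross-cloud MVs.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Row = Tuple[str, float]  # (group key, amount)


class IncrementalSumView:
    """Toy SUM(amount) GROUP BY key view kept current by applying only changed rows."""

    def __init__(self) -> None:
        self._sums: Dict[str, float] = defaultdict(float)
        self._counts: Dict[str, int] = defaultdict(int)

    def apply(self, inserted: Iterable[Row] = (), deleted: Iterable[Row] = ()) -> None:
        for key, amount in inserted:
            self._sums[key] += amount
            self._counts[key] += 1
        for key, amount in deleted:
            self._sums[key] -= amount
            self._counts[key] -= 1
            if self._counts[key] == 0:       # no rows left in this group
                del self._sums[key], self._counts[key]

    def snapshot(self) -> Dict[str, float]:
        return dict(self._sums)


if __name__ == "__main__":
    view = IncrementalSumView()
    view.apply(inserted=[("us", 10.0), ("eu", 5.0)])                 # initial load
    view.apply(inserted=[("us", 2.5)], deleted=[("eu", 5.0)])        # only the delta moves
    print(view.snapshot())
```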

Google Cloud Global Cloud Service Provider of the Year: Google Cloud is thrilled to be recognized as Palo Alto Networks’ 2023 Global Cloud Service Provider of the Year and Global Cortex Partner of the Year. Google Cloud and Palo Alto Networks are dedicated to working together to support customer cloud journeys with an array of jointly engineered and integrated security solutions that enable digital innovation with ease. Read the Palo Alto Networks blog.

GKE Enterprise edition free trial: We recently announced the general availability of GKE Enterprise, the premium edition of Google Kubernetes Engine (GKE). With GKE Enterprise, companies can increase velocity across multiple teams, and easily and securely run their most important business-critical apps and the latest AI/ML workloads at scale, with a fully integrated and managed solution from Google Cloud. Start the 90-day free trial of GKE Enterprise edition today by going to the GKE console and clicking the “Learn about GKE Enterprise” button.

Assured Workloads Resource Validation

In our new blog post on Cost Management in BigQuery, you’ll learn how to use budgets and custom quota to help you stay on top of your spending and prevent surprises on your cloud bill. The interactive tutorials linked in the article will help you set them up for your own Google Cloud projects in no time!

Leverage the transformative power of generative AI to elevate your customer service. Discover how you can optimize ROI, enhance customer satisfaction, and revolutionize your contact center operations with Google’s latest conversational AI offerings in this new blog.

In the first of our new Sketchnote series on Cloud FinOps, Erik and Pathik dive into what Cloud FinOps is, and how it can help your organization optimize its cloud budget.

Join Google Cloud’s product management leadership for a Data Analytics Innovation Roadmap session on November 13th. In this session, we will go through recent innovations, strategy, and plans for BigQuery, Streaming Analytics, Data Lakes, Data Integration, and GenAI. This session will give you insight into Google’s feature development and will help your team plan your data analytics strategy.

Hear from Google Cloud experts on modernizing software delivery with generative AI, running AI/ML workloads on GKE, the future of AI-infused apps, and more at Digital Transform: the future of AI-powered apps, November 15th.

Vertex AI Search: Read about exciting new generative AI features coming to Vertex AI Search, our platform for creating search-based applications for your business. Vertex AI Search provides customers with a tunable Retrieval Augmented Generation (RAG) system for information discovery. Learn more in this blog.

Vector similarity search: If you are looking to build an ecommerce recommendation engine, ad serving, or another DIY application based on approximate nearest neighbor (ANN) search, also known as vector similarity search, dive into our vector search capability, which is part of the Vertex AI Search platform. We’ve expanded features and made it easier than ever for developers to get started building their apps.

Cloud Deploy – Deploy hooks (GA) allow users to specify and execute pre- and post-deploy actions using Cloud Deploy. This allows customers to run infrastructure deployment, database schema updates, and other activities immediately before a deploy job, and cleanup operations as part of a post (successful) deploy job. Learn more.

Cloud Deploy – Cloud Deploy now uses Skaffold 2.8 as the default Skaffold version for all target types. Learn more.

Artifact Registry – Artifact Registry remote repositories are now generally available (GA). Remote repositories store artifacts from external sources such as Docker Hub or PyPI. A remote repository acts as a proxy for the external source so that you have more control over your external dependencies. Learn more.

Artifact Registry – Artifact Registry virtual repositories are now generally available (GA). Virtual repositories act as a single access point to download, install, or deploy artifacts in the same format from one or more upstream repositories. Learn more.
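The vector similarity search item above is easier to picture with a small example. The sketch below is illustrative and does not use the Vertex AI API. It ranks items by cosine similarity between embedding vectors. The catalog and its three-dimensional vectors are hypothetical; real embeddings come from a model and have hundreds of dimensions. It performs an exact, brute-force scan. An ANN service approximates this same ranking so that it can skip most of the catalog at scale.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: List[float], index: Dict[str, List[float]], k: int) -> List[Tuple[str, float]]:
    """Exact nearest neighbors: score every item, then keep the k most similar.

    ANN indexes return (approximately) the same ranking without a full scan;
    this brute-force version is only practical for small catalogs.
    """
    scored = [(item_id, cosine_similarity(query, vec)) for item_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical product embeddings, for illustration only.
catalog = {
    "red-shoes": [0.9, 0.1, 0.0],
    "blue-shoes": [0.8, 0.2, 0.1],
    "coffee-mug": [0.0, 0.1, 0.9],
}

for item_id, score in top_k([1.0, 0.1, 0.0], catalog, k=2):
    print(item_id, round(score, 3))
```

With this toy query, the two shoe items rank above the mug. A recommendation engine would return those item IDs as "similar products."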

ABAP SDK for Google Cloud now supports 40+ more APIs, an additional authentication mechanism and enhanced developer productivity for SAP ABAP developers. Learn more in this blog post.

Our newly published Storage decision tree helps you research and select the storage services in Google Cloud that best match your specific workload needs, and the accompanying blog provides an overview of the services offered for block storage, object storage, NFS and Multi-Writer file storage, SMB storage, and storage for data lakes and data warehouses.

Meet the inaugural cohort of the Google for Startups Accelerator: AI First program, featuring groundbreaking businesses from eight countries across Europe and Israel that use AI and ML to solve complex problems. Learn how Google Cloud empowers these startups and check out the selected ventures here.

BigQuery is introducing new SQL capabilities for improved analytics flexibility, data quality, and security. Examples include schema support for flexible column names, authorized stored procedures, and ANY_VALUE with HAVING MAX/MIN (also known as MAX_BY and MIN_BY), among many more. Check out full details here.

Cloud Logging is introducing, in Preview, the ability to save charts from Log Analytics to a custom dashboard in Cloud Monitoring. Viewing, copying, and sharing the dashboards are supported in Preview. For more information, see Save a chart to a custom dashboard.

Cloud Logging now supports customizable dashboards in its Logs Dashboard. You can now add your own charts to see what’s most valuable to you on the Logs Dashboard. Learn more here.

Cloud Logging launches several usability features for effective troubleshooting. Learn more in this blog post.

Search your logs by service name with the new option in Cloud Logging. You can now use the Log fields pane to select by service, which makes it easier to quickly find your Kubernetes container logs. Check out the details here.

Community Security Analytics (CSA) can now be deployed via Dataform to help you analyze your Google Cloud security logs. Dataform simplifies deploying and operating CSA on BigQuery, with significant performance gains and cost savings. Learn why and how to deploy CSA with Dataform in this blog post.

Dataplex data profiling and AutoDQ are powerful new features that can help organizations improve their data quality and build more accurate and reliable insights and models. These features are now generally available. Read more in this blog post.
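For readers new to MAX_BY, the BigQuery item above is the key. `ANY_VALUE(x HAVING MAX y)` returns the `x` from a row where `y` is largest. The Python sketch below mirrors that per-group logic. It only illustrates the semantics and does not call BigQuery. The `sales` rows and the region/product/revenue column names are hypothetical. Tie-breaking here is a choice made for the sketch, because SQL does not promise which tied row wins.

```python
from typing import Dict, Iterable, Tuple

# Roughly the in-memory equivalent of (hypothetical table and columns):
#   SELECT region, MAX_BY(product, revenue) FROM sales GROUP BY region
# which can also be written as ANY_VALUE(product HAVING MAX revenue).


def max_by_per_group(rows: Iterable[Tuple[str, str, float]]) -> Dict[str, str]:
    """For each group key, return the value whose score is largest."""
    best: Dict[str, Tuple[float, str]] = {}
    for key, value, score in rows:
        # Keep the first row seen on ties (a choice made for this sketch).
        if key not in best or score > best[key][0]:
            best[key] = (score, value)
    return {key: value for key, (_, value) in best.items()}


sales = [
    ("EU", "shoes", 10.0),
    ("EU", "mugs", 30.0),
    ("US", "shoes", 50.0),
    ("US", "mugs", 20.0),
]
print(max_by_per_group(sales))  # {'EU': 'mugs', 'US': 'shoes'}
```

MIN_BY is the same loop with `<` in place of `>`.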

Introducing Looker’s Machine Learning Accelerator. This easy-to-install extension allows business users to train, evaluate, and predict with machine learning models right in the Looker interface.

Learn how Freestar has built a super-low-latency, globally distributed application powered by Memorystore and the Envoy proxy. This reference walks users through the finer details of the architecture and configuration so that they can easily replicate it for their own needs.

You can access comprehensive and up-to-date environmental information to develop sustainability solutions and help people adapt to the impacts of climate change through Google Maps Platform’s environment APIs. The Air Quality and Solar APIs are generally available today. Get started or learn more in this blog post.

Google Cloud’s Global Partner Ecosystems & Channels team launched the Industry Value Networks (IVN) initiative at Google Cloud NEXT ’23. IVNs combine expertise and offerings from systems integrators (SIs), independent software vendors (ISVs), and content partners to create comprehensive, differentiated, repeatable, and high-value solutions that accelerate time-to-value and reduce risk for customers. To learn more about the IVN initiative, please see this blog post.

You can now easily export data from Earth Engine into BigQuery with our new connector. This feature allows for improved workflows and new analyses that combine geospatial raster and tabular data. This is the first step toward deeper interoperability between the two platforms, supporting innovations in geospatial sustainability analytics. Learn more in this blog post or join our session at Cloud Next.

You can now view your log query results as a chart in the Log Analytics page in Cloud Logging. With this new capability available in Preview, users can write a SQL filter and then use the charting configuration to build a chart. For more information, see Chart query results with Log Analytics.

You can now use Network Analyzer and the Recommender API to query the IP address utilization of your GCP subnets and identify subnets that might be full or oversized. Learn more in a dedicated blog post here.

Memorystore has introduced version support for Redis 7.0. Learn more about the included features and upgrade your instance today!
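What "IP address utilization" means for a subnet can be shown with simple arithmetic. This sketch does not call Network Analyzer or the Recommender API. It computes utilization locally with Python's `ipaddress` module. It relies on one fact from Google Cloud's VPC documentation: four addresses in each subnet's primary IPv4 range are reserved. The "full" and "oversized" thresholds are hypothetical values chosen for the example, not the ones Network Analyzer uses.

```python
import ipaddress
from typing import Iterable

# Google Cloud reserves four addresses in a subnet's primary IPv4 range
# (network, default gateway, second-to-last, and broadcast).
RESERVED_PER_SUBNET = 4


def utilization(cidr: str, allocated: Iterable[str]) -> float:
    """Fraction of usable addresses in `cidr` that are allocated."""
    network = ipaddress.ip_network(cidr)
    usable = network.num_addresses - RESERVED_PER_SUBNET
    # Count each allocated address once, ignoring any outside this subnet.
    in_range = {ip for ip in allocated if ipaddress.ip_address(ip) in network}
    return len(in_range) / usable


def classify(ratio: float, full_at: float = 0.8, oversized_below: float = 0.1) -> str:
    """Label a subnet; both thresholds are hypothetical, not Network Analyzer's."""
    if ratio >= full_at:
        return "nearly full"
    if ratio < oversized_below:
        return "possibly oversized"
    return "ok"


used = [f"10.0.0.{i}" for i in range(2, 11)]  # 9 hypothetical VM addresses
ratio = utilization("10.0.0.0/28", used)      # 9 of 12 usable addresses
print(ratio, classify(ratio))  # 0.75 ok
```

A /28 has 16 addresses, so only 12 are usable. This is why small subnets fill up faster than their CIDR size suggests.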

Attack Path Simulation is now generally available in Security Command Center Premium. This new threat prevention capability automatically analyzes a customer’s Google Cloud environment to discover attack pathways and generate attack exposure scores to prioritize security findings. Learn more. Get started now.

Cloud Deploy has updated the UI with the ability to create a pipeline along with a release. The feature is now GA. Read more.

Our newly published Data & Analytics decision tree helps you select the services on Google Cloud that best match your data workload needs, and the accompanying blog provides an overview of the services offered for data ingestion, processing, storage, governance, and orchestration.

Customer expectations of ecommerce platforms are at an all-time high, and customers now demand a seamless shopping experience across platforms, channels, and devices. Establishing a secure and user-friendly login platform can make it easier for users to self-identify and help retailers gain valuable insights into customers’ buying habits. Learn more about how retailers can better manage customer identities to support an engaging ecommerce user experience using Google Cloud Identity Platform.

Our latest Cloud Economics post just dropped, exploring how customers can benchmark their IT spending against peers to optimize investments. Comparing metrics like tech spend as a percentage of revenue and OpEx uncovers opportunities to increase efficiency and business impact. This data-driven approach is especially powerful for customers undergoing transformation.
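The benchmarking idea in the Cloud Economics item above comes down to two ratios and a gap. This minimal sketch computes tech spend as a percentage of revenue and of OpEx, then compares the first ratio against a peer figure. Every number in it, including the peer median, is hypothetical and appears only to show the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class Financials:
    revenue: float     # annual revenue (hypothetical units, e.g. $M)
    opex: float        # annual operating expenses
    tech_spend: float  # annual technology / IT spend

    def tech_pct_of_revenue(self) -> float:
        return 100.0 * self.tech_spend / self.revenue

    def tech_pct_of_opex(self) -> float:
        return 100.0 * self.tech_spend / self.opex


def gap_vs_peers(ours: float, peer_median: float) -> float:
    """Percentage-point gap; positive means spending above the peer median."""
    return ours - peer_median


company = Financials(revenue=500.0, opex=400.0, tech_spend=30.0)
print(company.tech_pct_of_revenue())  # 6.0
print(company.tech_pct_of_opex())     # 7.5
print(gap_vs_peers(company.tech_pct_of_revenue(), peer_median=4.5))  # 1.5
```

A gap on its own is not good or bad. A company in the middle of a transformation may deliberately spend above its peers.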

Cloud Deploy now supports deploy parameters. With deploy parameters, you can pass parameters for your release, and those values are provided to the manifest or manifests before those manifests are applied to their respective targets. A typical use is to apply different values to manifests for different targets in a parallel deployment. Read more.

Cloud Deploy is now listed among the Google Cloud services that can be configured to meet data residency requirements. Read more.

Log Analytics in Cloud Logging now supports most regions. Users can now upgrade buckets to use Log Analytics in Singapore, Montréal, London, Tel Aviv, and Mumbai. Read more for the full list of supported regions.
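The deploy-parameters item above describes rendering one manifest with different values per target. The generic sketch below shows that flow using Python's `string.Template`. The `${replicas}` placeholder, the manifest, and the target names are hypothetical. This is not Cloud Deploy's own parameter syntax. The rule that target values override release values is also an assumption made for illustration.

```python
from string import Template
from typing import Dict

# Hypothetical manifest template; Cloud Deploy uses its own placeholder syntax.
MANIFEST = Template(
    "apiVersion: apps/v1\n"
    "kind: Deployment\n"
    "metadata:\n"
    "  name: web\n"
    "spec:\n"
    "  replicas: ${replicas}\n"
)


def render_for_targets(
    release_params: Dict[str, str],
    target_params: Dict[str, Dict[str, str]],
) -> Dict[str, str]:
    """Render one manifest per target; target values override release values here."""
    rendered = {}
    for target, overrides in target_params.items():
        params = {**release_params, **overrides}
        # substitute() raises KeyError if a placeholder has no value.
        rendered[target] = MANIFEST.substitute(params)
    return rendered


out = render_for_targets(
    release_params={"replicas": "2"},
    target_params={"us-east": {"replicas": "5"}, "eu-west": {}},
)
print(out["us-east"])
```

Failing on a missing value, rather than silently leaving the placeholder in place, keeps an unrendered manifest from reaching a target.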

Cloud CDN now supports private origin authentication in GA. This capability improves security by allowing only trusted connections to access the content on your private origins and preventing users from directly accessing it.

Workload Manager – Guided Deployment Automation is now available in Public Preview, with initial support for SAP solutions. Learn how to configure and deploy SAP workloads directly from a guided user interface, leveraging end-to-end automation built on Terraform and Ansible.

Artifact Registry – Artifact Registry now supports cleanup policies, in Preview. Cleanup policies help you manage artifacts by automatically deleting artifacts that you no longer need, while keeping artifacts that you want to store. Read more.
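The Artifact Registry cleanup-policy item above combines a delete rule with keep rules. This sketch evaluates one delete rule (older than N days) against two keep rules: the most recent K versions and versions carrying a protected tag. In this sketch, keep wins whenever both rules match. This is plain Python over hypothetical version records. It does not use Artifact Registry's policy format or API, and all names, dates, and thresholds are illustrative.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Set


@dataclass
class Version:
    name: str
    created: datetime
    tags: List[str] = field(default_factory=list)


def select_for_deletion(
    versions: List[Version],
    now: datetime,
    older_than: timedelta,
    keep_recent: int,
    protected_tags: Set[str],
) -> List[str]:
    """Return names of versions the delete rule would remove after keep rules apply."""
    newest_first = sorted(versions, key=lambda v: v.created, reverse=True)
    keep = {v.name for v in newest_first[:keep_recent]}
    keep |= {v.name for v in versions if protected_tags.intersection(v.tags)}
    return [
        v.name
        for v in versions
        if now - v.created > older_than and v.name not in keep
    ]


now = datetime(2023, 10, 1)
versions = [
    Version("v1", datetime(2023, 6, 1)),
    Version("v2", datetime(2023, 7, 1), ["release"]),
    Version("v3", datetime(2023, 8, 1)),
    Version("v4", datetime(2023, 9, 25)),
]
print(select_for_deletion(versions, now, timedelta(days=30),
                          keep_recent=2, protected_tags={"release"}))  # ['v1']
```

v2 and v3 are both older than 30 days, but v2 carries the protected tag and v3 is among the two newest. Only v1 is removed.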

Cloud Run jobs now supports long-running jobs. A single Cloud Run jobs task can now run for up to 24 hours. Read more.

How Google Cloud NAT helped strengthen Macy’s security. Read more.

Cloud Deploy parallel deployment is now generally available. You can deploy to a target that’s configured to represent multiple targets, and your application is deployed to those targets concurrently. Read more.

Cloud Deploy canary deployment strategy is now generally available. A canary deployment is a progressive rollout of an application that splits traffic between an already-deployed version and a new version. Read more.
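The traffic split behind a canary deployment can be pictured with a generic sketch. In Cloud Deploy, the actual split is carried out by the runtime platform. The sketch below only shows the idea: it hashes a stable key into one of 100 buckets and sends keys below the canary percentage to the new version. The phase percentages and user keys are hypothetical. Because the bucket depends only on the key, a user already on the canary stays there as the percentage grows.

```python
import hashlib


def routes_to_canary(key: str, canary_percent: int) -> bool:
    """Deterministically send roughly `canary_percent`% of keys to the new version."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    return bucket < canary_percent


users = [f"user-{n}" for n in range(10_000)]
for percent in (25, 50, 100):  # hypothetical canary phases
    share = sum(routes_to_canary(u, percent) for u in users) / len(users)
    print(percent, round(share, 3))
```

Hashing a stable key, such as a user ID, keeps each user on a single version for the whole phase. Picking a version at random on every request would let one user bounce between versions.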

Google Cloud’s Managed Service for Prometheus now supports Prometheus exemplars. Exemplars provide cross-signal correlation between your metrics and your traces, so you can more easily pinpoint the root cause of issues surfaced in your monitoring operations.

Managing logs across your organization is now easier with the general availability of user-managed service accounts. You can now choose your own service account when sending logs to a log bucket in a different project.

Data Engineering and Analytics Day – Join Google Cloud experts on June 29th to learn about the latest data engineering trends and innovations, participate in hands-on labs, and learn best practices for Google Cloud’s data analytics tools. You will gain a deeper understanding of how to centralize, govern, secure, streamline, analyze, and use data for advanced use cases like ML processing and generative AI.

TMI: Shifting Down, Not Left is the first post in our new modernization series, The Modernization Imperative. Here, Richard Seroter talks about the strategy of ‘shifting down’ and relying on managed services to relieve burdens on developers.

Cloud Econ 101: The first in a new series on optimizing cloud tools to achieve greater return on your cloud investments. Join us biweekly as we explore ways to streamline workloads and examine successful cases of aligning technology goals to drive business value.

The Public Preview of frontend mutual TLS support on Global External HTTPS Load Balancing is now available. You can now use Global External HTTPS Load Balancing to offload mutual TLS authentication for your workloads. This includes client mTLS for Apigee X northbound traffic using the Global HTTPS Load Balancer.

FinOps from the field: How to build a FinOps Roadmap – In a world where cloud services have become increasingly complex, how do you take advantage of the features without the nasty bill shock at the end? Learn how to build your own FinOps roadmap step by step, with helpful tips and tricks from FinOps workshops Google has completed with customers.

We are now offering up to $1M of financial protection to help cover the costs of undetected cryptomining attacks. This new program is available only to Security Command Center Premium customers. Security Command Center makes Google Cloud a safe place for your applications and data. Read about this new program in our blog.

Global External HTTP(S) Load Balancer and Cloud CDN’s advanced traffic management using flexible pattern matching is now GA. This allows you to use wildcards anywhere in your path matcher. You can use this to customize origin routing for different types of traffic, request and response behaviors, and caching policies. In addition, you can now use results from your pattern matching to rewrite the path that is sent to the origin.

Security Command Center (SCC) Premium, our built-in security and risk management solution for Google Cloud, is now generally available for self-service activation for full customer organizations. Customers can get started with SCC in just a few clicks in the Google Cloud console. There is no commitment requirement, and pricing is based on a flexible pay-as-you-go model.

Dataform is Generally Available. Dataform offers an end-to-end experience to develop, version control, and deploy SQL pipelines in BigQuery. Using a single web interface, data engineers and data analysts of all skill levels can build production-grade SQL pipelines in BigQuery while following software engineering best practices such as version control with Git, CI/CD, and code lifecycle management. Learn more.
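The load balancer item above pairs wildcard path matching with a path rewrite that reuses the matched values. This sketch illustrates that combination by translating a simplified path template into a regular expression. The template syntax is assumed for the example and only loosely modeled on URL-map path templates: `*` matches one segment, `**` matches the rest of the path, and `{name}` / `{name=**}` capture values. It is not the load balancer's exact matching semantics. The paths are hypothetical.

```python
import re
from typing import Optional


def compile_template(template: str) -> "re.Pattern[str]":
    """Translate a simplified path template (assumed syntax) into an anchored regex."""
    segments = template.strip("/").split("/")
    parts = []
    for i, segment in enumerate(segments):
        if segment.startswith("{") and segment.endswith("}"):
            name, _, pattern = segment[1:-1].partition("=")
        else:
            name, pattern = None, segment
        if pattern == "**" and i != len(segments) - 1:
            raise ValueError("'**' is only allowed in the last segment")
        if pattern == "**":
            body = ".*"            # the rest of the path, slashes included
        elif pattern in ("", "*"):
            body = "[^/]+"         # exactly one path segment
        else:
            body = re.escape(pattern)  # literal text
        parts.append(f"(?P<{name}>{body})" if name else body)
    return re.compile("^/" + "/".join(parts) + "$")


def rewrite_path(path: str, match_template: str, rewrite_template: str) -> Optional[str]:
    """Return the rewritten path if `path` matches, else None."""
    m = compile_template(match_template).match(path)
    if m is None:
        return None
    return rewrite_template.format(**m.groupdict())


print(rewrite_path("/videos/us/en/intro.mp4",
                   "/videos/{country}/{lang}/{file=**}",
                   "/{lang}/{country}/{file}"))  # /en/us/intro.mp4
print(rewrite_path("/images/logo.png",
                   "/videos/{country}/{lang}/{file=**}",
                   "/{file}"))  # None
```

Capturing variables during the match is what makes the rewrite possible. The origin can receive a path laid out differently from the one the client requested.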

Google Cloud Deploy: The price of an active delivery pipeline has been reduced. Also, single-target delivery pipelines no longer incur a charge. Underlying service charges continue to apply. See the Pricing page for more details.

Security Command Center (SCC) Premium pricing for project-level activation is now 25% lower for customers who use SCC to secure Compute Engine, GKE Autopilot, App Engine, and Cloud SQL. Please see our updated rate card. We have also expanded the number of finding types available for project-level Premium activations to help make your environment more secure. Learn more.

Vertex AI Embeddings for Text: Grounding LLMs made easy: Many people are now starting to think about how to bring generative AI and large language models (LLMs) to production services. You may be wondering “How do I integrate LLMs or AI chatbots with existing IT systems, databases, and business data?”, “We have thousands of products. How can I let an LLM memorize them all precisely?”, or “How do I handle hallucination issues in AI chatbots to build a reliable service?”. Here is a quick solution: grounding with embeddings and vector search. What is grounding? What are embeddings and vector search? In this post, we will learn these crucial concepts to build reliable generative AI services for enterprise use, with live demos and source code.
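Grounding, as described in the item above, means retrieving the most relevant business data first and then instructing the LLM to answer only from it. This self-contained sketch shows that retrieve-then-prompt shape. The `embed` function is a toy bag-of-words stand-in for a real embedding model such as Vertex AI Embeddings for Text, and this is not that API. The product catalog, prompt wording, and `k` value are hypothetical. The call to the LLM itself is left out.

```python
import math
import re
from collections import Counter
from typing import Dict


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def build_grounded_prompt(question: str, docs: Dict[str, str], k: int = 2) -> str:
    """Retrieve the k most similar docs and place them in the prompt as context."""
    q = embed(question)
    ranked = sorted(docs, key=lambda doc_id: cosine(q, embed(docs[doc_id])), reverse=True)
    context = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id in ranked[:k])
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


products = {
    "sku-1": "Trail running shoe, waterproof, sizes 6 to 12",
    "sku-2": "Ceramic coffee mug, 350 ml, dishwasher safe",
    "sku-3": "Road running shoe, lightweight mesh, sizes 5 to 13",
}
print(build_grounded_prompt("Which running shoe is waterproof?", products))
```

Only the retrieved product records reach the prompt, so the model never has to "memorize" the whole catalog. The explicit "say so" instruction also gives it a way out other than hallucinating.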