Editor’s note: Today, we hear from Google Fellow Amin Vahdat, who is the VP & GM for ML, Systems, and Cloud AI at Google. Amin originally delivered this as a keynote in 2023 at the University of Washington for The Allen School’s Distinguished Lecture Series. This post captures Amin’s reflections on the history of distributed computing, where we are today, and what we can expect for the next generation of computing services.

Over the past fifty years, computing and communication have transformed society with sustained exponential growth in capacity, efficiency, and capability. Over that time, we have, as a community, delivered a 50-million-fold increase in transistor count per CPU and grown the Internet from 4 nodes to 5.39 billion.

While these advances are impressive, the human capabilities that result from these advances are even more compelling, sometimes bordering on what was previously the domain of science fiction. We now have near-instantaneous access to the evolving state of human knowledge, limited only by our ability to make sense of it. We can now perform real-time language translation, breaking down fundamental barriers to human communication. Commensurate improvements in sensing and network speeds are delivering real-time holographic projections that will begin to support meaningful interaction at a distance. This explosion in computing capability is also powering next-generation AI systems that are solving some of the hardest scientific and engineering challenges of our time, for example, predicting the 3D structure of a protein, almost instantly, down to atomic accuracy, or unlocking advanced text-to-image diffusion technology, delivering high-quality, photorealistic outputs that are consistent with a user’s prompt.

Maintaining the pace of underlying technological progress has not been easy. Every 10-15 years, we encounter fundamental challenges that require foundational inventions and breakthroughs to sustain the exponential growth of the efficiency and scale of our infrastructure, which in turn power entirely new categories of services. It is as if every factor of a thousand exposes some new fundamental, progressively more challenging limit that must be overcome and creates some transformative opportunity. We are in one of those watershed moments, a once-in-a-generation challenge and opportunity to maintain and accelerate the awe-inspiring rate of progress at a time when the underlying, seemingly insatiable demand for computing is only accelerating.

A look back at the brief history of computing suggests that we have worked through four such major transitions, each defining an ‘epoch’ of computing. We offer a historical taxonomy that points to a manifest need to define and to drive a fifth epoch of computing, one that is data-centric, declarative, outcome-oriented, software-defined, and centered on proactively bringing insights to people. While each previous epoch made the previously unimaginable routine, this fifth epoch will bring about the largest transformation thus far, promising to democratize access to knowledge and opportunity. At the same time, it will require overcoming some of the most intrinsically difficult, cross-stack challenges in computing.

We begin our look back at Epoch 0. Purists will correctly argue that we could look back thousands of years further, but we choose to start with some truly landmark and foundational developments in computer science that took place between 1947 and 1969, laying the basis for modern computing and communication.

1947: Bardeen, Brattain and Shockley invent the first working transistor.

1948: Shannon introduces Information Theory, the basis for all network communication.

1949: Stored programs in computers become operational.

1956: High-level programming languages are invented.

1964: Instruction Set Architectures, common across different hardware generations, emerge.

1965: Moore’s Law introduced, positing that transistor count per integrated circuit will double every 18-24 months.

1967: Multi-user operating systems provide protected sharing of resources.

1969: Introduction of the ARPANet, the basis for the modern Internet.

These breakthroughs became the basis for modern computing at the end of Epoch 0: four computers based on integrated circuits running stable instruction set architectures and a multi-user, time-shared operating system connected to a packet-switched internet. This seemingly humble baseline laid the foundation for exponential progress in subsequent epochs.

In the first Epoch, computer networks were largely used in an asynchronous manner: transfer data across the network (e.g., via FTP), operate on it, and then transfer results back.

Notable developments: SQL, FTP, email, and Telnet

Interaction time among computers: 100 milliseconds

Characteristics:

• Low-bandwidth, high-latency networks

• Rare pairwise interactions between expensive computers

• Character keystroke interactions with humans

• The emergence of open source software

Breakthrough: Personal computers

Aided by increasing network speeds, prevalence of personal computers/workstations, and widespread, interoperable protocols (IP, TCP, NFS, HTTP), synchronous, transparent computation and communication became widespread in Epoch 2.

Notable developments: Remote Procedure Call, client/server computing, LANs, leader election and consensus

Interaction time among computers: 10 milliseconds

Characteristics:

• 10 Mbps networks

• Internet Architecture scales globally thanks to TCP/IP

• Full 32-bit CPU fits on a chip

• Shared resources between multiple computers

Breakthrough: The World Wide Web

In Epoch 3, the true breakthrough of HTTP and the World Wide Web brought network computing to the masses, breaking the confines of personal computing. To keep pace with continued exponential growth in the Internet and the needs of a global user population, many of the design patterns of modern computing were established during this period.

Toward the end of Epoch 3, Dennard scaling came to an end, which essentially capped the maximum clock frequency of a single CPU core. This limitation led the industry to adopt multi-core architectures, necessitating a move toward asynchronous, multi-threaded, and concurrent development environments.

Notable developments: HTTP, three-tier services, massive clusters, web search

Interaction time among computers: 1 millisecond

Characteristics:

• 100 Mbps–1 Gbps networks

• Autonomous Systems / BGP

• Complex apps no longer fit on a single server; scaling to many servers

• Web indexing and search, population-scale email

Breakthrough: Cluster-based Internet services, mobile-first design, multithreading and instruction-level parallelism

Epoch 4 established planetary-scale services available to billions of people through ubiquitous cellular devices. In parallel, a renaissance in machine learning drove more real-time control and insights. All of this was powered by warehouse-scale clusters of commodity computers interconnected by high-speed networks, which together processed vast datasets in real-time.

Notable developments: Global cellular data coverage, planet-scale services, ubiquitous video

Interaction time among computers: 100 microseconds

Characteristics:

• 10-100 Gbps networks, flash

• Multiple cores per CPU socket

• Infrastructure that scales out across LANs (e.g., GFS, MapReduce, Hadoop)

• Mobile apps, global cellular data coverage

Breakthroughs: Mainstream machine learning, readily available specialized compute hardware, cloud computing.

Today, we have transitioned to the fifth Epoch, which is marked by a superposition of two opposing trends. First, while transistor count per ASIC continues to increase at exponential rates, clock rates are flat and the cost of each transistor is now nearly flat, both limited by the increasing complexity and investment required to achieve smaller feature sizes. The implication is that improvements in performance normalized to cost, or performance efficiency, are flattening across compute, DRAM, storage, and network infrastructure. Second, ubiquitous network coverage, broadly deployed sensors, and data-hungry machine learning applications are accelerating the demand for raw computing infrastructure exponentially.

Notable developments: Machine learning, generative AI, privacy, sustainability, societal infrastructure

Interaction time among computers: 10 microseconds

Characteristics:

• 200 Gbps–1+ Tbps networks

• Ubiquitous, power-efficient, and high-speed wireless network coverage

• Increasingly specialized accelerators: TPUs, GPUs, Smart NICs

• Socket-level fabrics, optics, federated architectures

• Connected spaces, vehicles, appliances, wearables, etc.

Breakthroughs: Many coming…

Without fundamental breakthroughs in computing design and organization, our ability as a community to meet societal demands for computing infrastructure will falter. Coming up with new hardware and, increasingly, software architectures to overcome these limitations will define the fifth epoch of computing.

While we cannot predict the breakthroughs that will be delivered in this fifth epoch of computing, we do know that each previous epoch has been characterized by a factor of 1000x improvement in scale, efficiency, and cost-performance, all while improving security and reliability. The demand for scale and capability is only increasing, so delivering such gains without the tailwinds of Moore’s Law and Dennard scaling at our backs will be daunting. We imagine, however, that the broad strokes will involve:

Declarative programming models: The Von Neumann model of sequential code execution on a dedicated processor has been incredibly useful for developers for decades. However, the rise of distributed and multi-threaded computing has broken the abstraction to the point where much of modern imperative code focuses on defensive, and often inefficient, constructs to manage asynchrony, heterogeneity, tail latency, optimistic concurrency, and failures. Complexity will only increase in the years ahead, essentially requiring new declarative programming models focused on intent, the user, and business logic. At the same time, managing execution flow and responding to shifting deployment conditions will need to be delegated to increasingly sophisticated compilers and ML-powered runtimes.
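To make the contrast concrete, here is a minimal sketch of the idea rather than any particular system's API. The caller states an intent (how many attempts are acceptable and which errors count as transient) and a toy runtime owns the defensive retry logic. The names `Intent`, `execute`, and `make_flaky` are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


@dataclass(frozen=True)
class Intent:
    """Hypothetical declarative spec: what the caller wants, not how to get it."""
    max_attempts: int = 3
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError)


def execute(task: Callable[[], T], intent: Intent) -> T:
    """Toy runtime that owns the retry logic implied by the intent."""
    last_error = None
    for _ in range(intent.max_attempts):
        try:
            return task()
        except intent.retry_on as err:
            # Transient failure: remember it and try again.
            last_error = err
    raise RuntimeError(f"gave up after {intent.max_attempts} attempts") from last_error


def make_flaky(failures: int) -> Callable[[], str]:
    """Illustrative task that fails `failures` times, then returns "ok"."""
    state = {"calls": 0}

    def task() -> str:
        state["calls"] += 1
        if state["calls"] <= failures:
            raise ConnectionError("transient failure")
        return "ok"

    return task


# The business logic contains no retry loop; it only declares what is acceptable.
result = execute(make_flaky(2), Intent(max_attempts=3))
print(result)
```

In a production setting, the runtime behind such an interface would be far more sophisticated, handling placement, heterogeneity, and tail latency as well. The point of the sketch is only that the calling code shrinks to intent plus business logic.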

Hardware segmentation: In earlier epochs, a general-purpose server architecture with a system balance of CPU, memory, storage, and networking could efficiently meet workload needs throughout the data center. However, when designing for specialized computing needs such as ML training, inference, and video processing, the conflicting requirements for storage, memory capacity, latency, bandwidth, and communication are causing a proliferation of heterogeneous designs. When general-purpose compute performance was improving at 1.5x/year, pursuing even a 5x improvement for 10% of workloads did not make sense given the complexity. Today, such improvements can no longer be ignored. Addressing this gap will require new approaches to designing, verifying, qualifying, and deploying composable hardware ASICs and memory units in months, not years.
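A rough back-of-the-envelope calculation shows why the 5x-for-10% trade-off used to look unattractive. The sketch below assumes that "10% of workloads" means 10% of fleet compute time and applies an Amdahl-style formula. Both the interpretation and the helper names are assumptions for illustration, not figures from the talk.

```python
import math


def years_to_match(speedup: float, annual_gain: float) -> float:
    """Years of compounding `annual_gain` needed to reach `speedup`."""
    return math.log(speedup) / math.log(annual_gain)


def fleet_speedup(fraction: float, speedup: float) -> float:
    """Amdahl-style overall speedup when only `fraction` of the work is accelerated."""
    return 1.0 / ((1.0 - fraction) + fraction / speedup)


# General-purpose hardware improving 1.5x/year reaches 5x in about 4 years.
print(round(years_to_match(5.0, 1.5), 2))

# Accelerating 10% of the fleet by 5x yields only about 1.09x fleet-wide...
overall = fleet_speedup(0.10, 5.0)
print(round(overall, 3))

# ...which 1.5x/year general-purpose scaling delivered in roughly 2.5 months.
print(round(12 * years_to_match(overall, 1.5), 1))
```

Under these assumptions, a multi-year custom hardware effort bought about as much fleet-wide gain as waiting a few months. Once the general-purpose improvement rate flattens, the same calculation favors specialization.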

Software-defined infrastructure: As underlying infrastructure has become more complex and more distributed, multiple layers of virtualization from memory to CPU have maintained the single server abstraction for individual applications. This trend will continue in the coming epoch as infrastructure continues to scale out and become more heterogeneous. As a corollary, hardware segmentation, declarative programming models, and distributed computing environments composed of thousands of servers will stretch virtualization beyond the confines of individual servers to include distributed computing on a single server, multiple servers, storage/memory arrays, and clusters, in some cases bringing resources across an entire campus together to efficiently deliver end results.

Provably secure computation: In the last epoch, the need to sustain compute efficiency inadvertently came at the cost of security and reliability. However, as our lives move increasingly online, the need for privacy and confidentiality increases exponentially for individuals, businesses, and governments. Data sovereignty, or the need to restrict the physical location of data, even derived data, will become increasingly important both to adhere to government policies and to transparently show the lineage of increasingly ML-generated content. Despite some cost in baseline performance, these needs must be first-class requirements and constraints.

Sustainability: The first three epochs of computing delivered exponential improvements in performance for fixed power. With the end of Dennard scaling in the fourth epoch, global power consumption associated with computing has grown quickly, partially offset by the move to cloud-hosted infrastructure, which is 2-3x more power-efficient relative to earlier, on-premises designs. Further, cloud providers have made broad commitments to move to first carbon-neutral and then carbon-free power sources. However, the demand for data and compute will continue to grow and even likely accelerate in the fifth epoch. This will turn power-efficiency and carbon emissions into primary systems-evaluation metrics. Of particular note, embodied carbon over the entire lifecycle of infrastructure build and delivery will require both improved visibility and optimization.

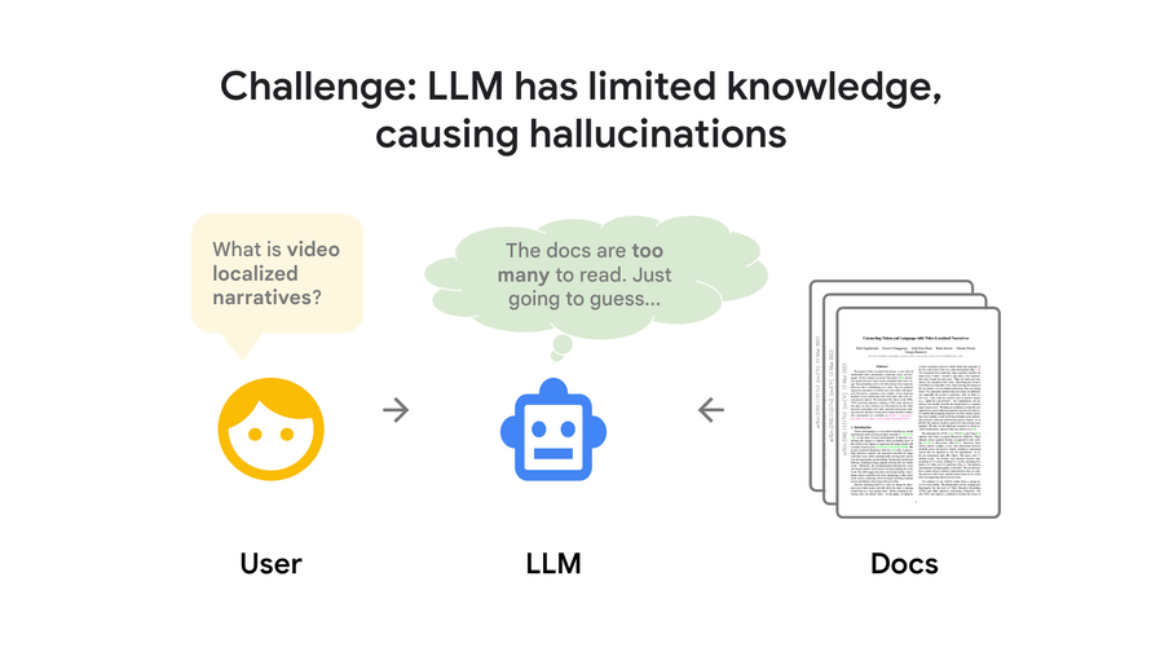

Algorithmic innovation: The tailwinds of exponentially increasing performance have allowed software efficiency improvements to often go neglected. As improvement in underlying hardware components slows, the focus will turn to software and algorithmic opportunities. Studies indicate that opportunities for 2-10x improvement in software optimization abound in systems code. Efficiently identifying these software optimization opportunities and developing techniques to gracefully and reliably deliver these benefits to production systems at scale will be a critical opportunity. Leveraging recent breakthroughs in coding LLMs to partially automate this work would be a significant accelerant in the fifth epoch.

Integrating across the above, the fifth epoch will be ruled by measures of overall user-system efficiency (useful answers per second) rather than lower-level per-component measures such as cost per MIPS, cost per GB of DRAM, cost per Gb/s, etc. Further, the units of efficiency will not be simply measured in performance-per-unit-cost but will explicitly account for power consumption and carbon emissions, and will take security and privacy as primary metrics, all while enforcing reliability requirements for the infrastructure on which society increasingly depends. Taken together, there are many untapped opportunities to deliver the next generation of infrastructure:

A greater than 10x opportunity in scale-out efficiency of our distributed infrastructure across hardware and software.

Another 10x opportunity in matching application balance points — that is, the ratio between different system resources such as compute, accelerators, memory, storage, and network — through software-defined infrastructure.

A more than 10x opportunity in next-generation accelerators and segment-specific hardware components relative to traditional one-size-fits-all, general-purpose computing architectures.

Finally, there is a hard-to-quantify but absolutely critical opportunity to improve developer productivity while simultaneously delivering substantially improved reliability and security.
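As a quick sanity check on how the three quantified opportunities above combine, the sketch below multiplies their quoted lower bounds. It assumes the three factors are independent and compose multiplicatively, which is a simplification. The dictionary keys are shorthand labels, not terms from the talk.

```python
import math

# Lower bounds quoted in the list above; the keys are illustrative shorthand.
opportunities = {
    "scale_out_efficiency": 10,
    "application_balance_points": 10,
    "accelerators_and_segment_hardware": 10,
}


def combined_gain(factors) -> int:
    """Multiply independent improvement factors into one overall factor."""
    return math.prod(factors)


print(combined_gain(opportunities.values()))  # 1000
```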

Combining these trends, we are on the cusp of yet another dramatic 1000x efficiency gain over the next epoch that will define the next generation of infrastructure services and enable the next generation of computing services, likely centering around breakthroughs in multimodal models and generative AI. The opportunity to define, design, and deploy what computing means for the next generation does not come along very often, and the tectonic shifts in this fifth epoch promise perhaps the biggest technical transformations and challenges to date, requiring a level of responsibility, collaboration and vision perhaps not seen since the earliest days of computing.
