Platform engineering, rooted in DevOps principles, streamlines developer experience and boosts productivity through automated infrastructure and self-service tools. In this blog, we’ll talk about how Google Kubernetes Engine (GKE) enables you to build a secure, scalable internal developer platform in Google Cloud for fast and reliable application delivery.

Adopting a modern architecture with cloud-first infrastructure

In today’s world of modern technology, staying ahead means being agile. Businesses that innovate rapidly and deliver reliably hold the key to success. Adopting a modern architecture with these key elements will power your software delivery process:

Modular systems: A good example of a modular system is a microservice-based application. Instead of one large, monolithic structure, a microservice-based application breaks down into a collection of smaller, independent services, enabling agile delivery and faster rollout of innovation.

Containerized applications on Kubernetes (K8s): Containers offer a lightweight method to package and deploy applications, facilitating seamless movement across various environments. K8s platforms, such as GKE, establish a robust foundation for platform engineering through declarative configuration and automation.

Modern databases at the core: Modern databases are designed to handle the massive amounts of data that businesses generate today. They offer high performance, scalability, and reliability, helping to unlock the insights hidden in your data.

Embracing a modern architecture can help you attain the agility, scalability, and security essential for success in the digital era. Outcomes are measured through key metrics, such as deployment frequency, lead time for changes, mean time to restore, and change failure rate.
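
To make these four metrics concrete, here is a minimal sketch of how you might compute them from your own deployment history. The `Deployment` record and its field names are assumptions made for illustration; they are not part of any Google Cloud API, and your CI/CD tooling will likely expose this data in a different shape.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def delivery_metrics(deployments: list[Deployment], window_days: int) -> dict:
    """Summarize the four delivery metrics over a reporting window."""
    if not deployments:
        raise ValueError("need at least one deployment")
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.failed]
    restores = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "deployments_per_day": len(deployments) / window_days,
        "median_lead_time": median(lead_times),
        "change_failure_rate": len(failures) / len(deployments),
        # None when no failed deployment has a recorded restore time
        "mean_time_to_restore": (
            sum(restores, timedelta()) / len(restores) if restores else None
        ),
    }
```

Using the median for lead time is a design choice in this sketch: it keeps one unusually slow change from dominating the number.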

Deploying an enterprise developer platform on GKE

Platform engineering centers around creating and managing internal developer platforms (IDP) for your organization. GKE, through its deep integration with the Google Cloud ecosystem, serves as an ideal foundation for an IDP. Let’s explore how GKE empowers you to build a robust platform that supports your developers.

Establish a scalable, developer-friendly Kubernetes foundation

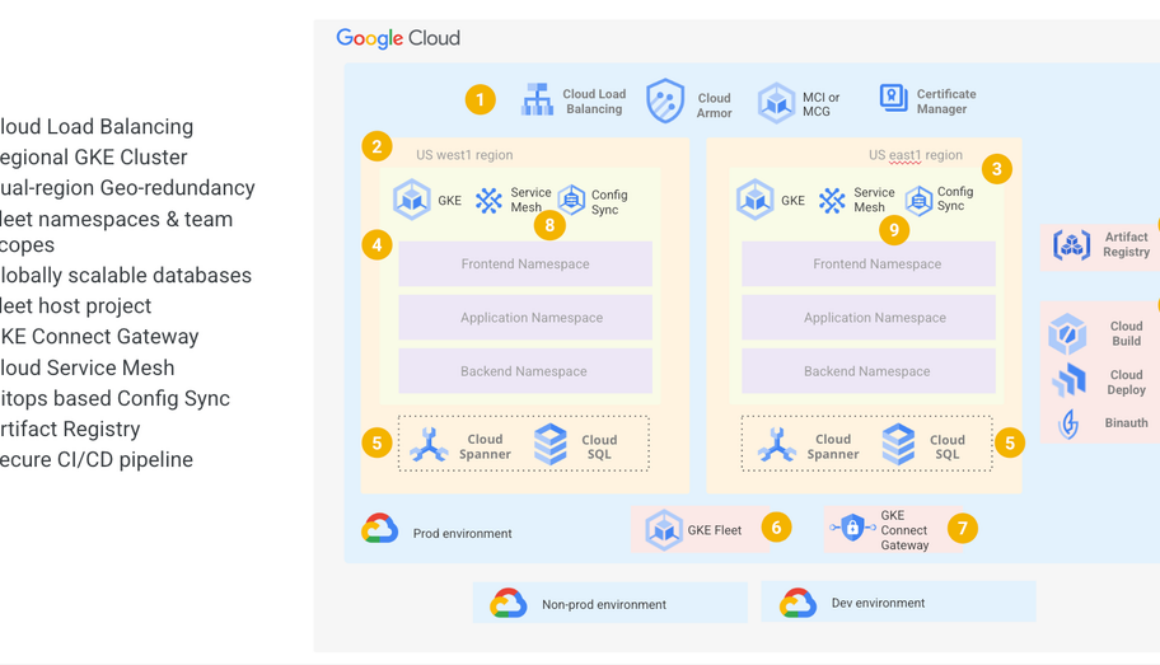

Many organizations manage distributed locations and support multiple applications and teams. For a platform built to scale from the start, consider GKE Fleet. This management layer allows you to operate multiple Kubernetes clusters from a centralized control plane. You can efficiently manage distributed clusters with feature deployment, upgrades, and monitoring while ensuring consistency across your environments. By adding a multi-cluster Gateway and multi-cluster Service to your fleet, you can simplify cross-cluster communications, traffic management, and high availability for microservice-based applications.

Fleet-based team management also establishes logical partitions to create a multi-tenant model that aligns with your organization. The balance of collaboration and isolation can be easily achieved as developers get autonomy within their assigned scope (e.g., designated clusters, namespaces and roles), while platform engineers benefit from the centralized oversight and tooling.

Implementing GitOps-based Kubernetes management tools like Config Sync can drive automation, operational efficiency, and consistency. The GitOps workflow offers advantages like version control for tracking changes, effortless rollbacks to mitigate issues, and proactive configuration drift prevention. In addition, Config Sync can help you maintain configuration integrity and standardization across multiple clusters. Furthermore, Config Sync’s multi-repository support enables you to delegate specific configuration aspects to individual application teams, simplifying management in complex environments.
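
The idea behind drift detection can be illustrated with a small, self-contained sketch. This is not how Config Sync is implemented; it is a simplified model, with a hypothetical `find_drift` helper, that compares the configuration declared in Git against what is running and reports only the fields the repository actually declares.

```python
def find_drift(desired: dict, live: dict, path: str = "") -> list[str]:
    """Return dotted paths where the live state differs from the declared state.

    Fields that exist only in the live state (for example, server-side
    defaults) are ignored, because the repository never declared them.
    """
    drift = []
    for key, want in desired.items():
        here = f"{path}.{key}" if path else key
        if key not in live:
            drift.append(f"{here}: missing in cluster")
        elif isinstance(want, dict) and isinstance(live[key], dict):
            # Recurse into nested objects such as spec or metadata
            drift.extend(find_drift(want, live[key], here))
        elif live[key] != want:
            drift.append(f"{here}: want {want!r}, got {live[key]!r}")
    return drift


declared = {"spec": {"replicas": 3, "image": "app:v2"}}
running = {"spec": {"replicas": 5, "image": "app:v2"}}
print(find_drift(declared, running))
```

In a GitOps workflow, a non-empty result would trigger reconciliation back to the declared state rather than a manual fix.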

With a robust foundation that automates repetitive cluster management tasks, platform engineers gain the freedom to focus on high-value initiatives. These include developing self-service infrastructure solutions and optimizing CI/CD pipelines for applications across the fleet.

Implement a zero-trust system architecture

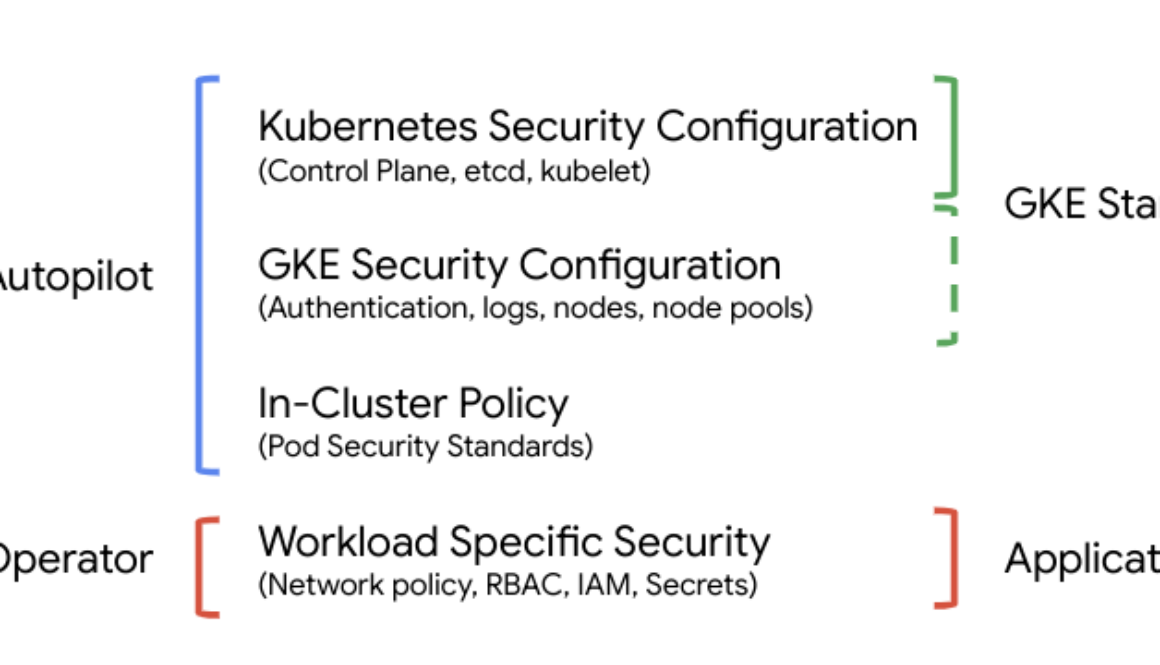

Platform engineers need to embrace a zero-trust mindset. Zero trust operates under the fundamental principle that no user, device, or network traffic should be inherently trusted. Therefore, each interaction attempt requires continuous authentication and authorization. As more applications adopt a microservices-based architecture, both complexity and the attack surface increase. In addition, traditional perimeter-based security models break down with the growing popularity of hybrid and remote working. GKE provides robust features to support the implementation of a zero-trust security strategy.

For microservices, Istio-based Anthos Service Mesh and Policy Controller combine to deliver strong, identity-based security. With mutual TLS and microservice segmentation at scale, you can establish a layered defense across workloads and clusters. Centralized policy enforcement ensures consistency, while audit logging enhances compliance. This comprehensive approach not only aligns with zero-trust principles but also enforces continuous authentication and authorization, alongside fine-grained security controls and least-privilege access for your services.
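
As a conceptual illustration of identity-based, least-privilege access, the sketch below models a deny-by-default check between services. It is not the service mesh API; the `Rule` type, the `is_allowed` function, and the service names are all hypothetical, and in practice the mesh enforces this kind of policy for you.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical allow rule between two service identities."""
    source: str                 # identity of the calling service
    destination: str            # identity of the target service
    methods: frozenset          # HTTP methods the caller may use


def is_allowed(rules, source, destination, method, authenticated):
    """Deny by default: allow only authenticated calls that match a rule."""
    if not authenticated:
        # No verified identity (for example, no valid mTLS certificate)
        return False
    return any(
        r.source == source and r.destination == destination and method in r.methods
        for r in rules
    )


rules = [Rule("frontend", "orders", frozenset({"GET", "POST"}))]
print(is_allowed(rules, "frontend", "orders", "GET", authenticated=True))
print(is_allowed(rules, "frontend", "payments", "GET", authenticated=True))
```

Every request is evaluated, and anything not explicitly permitted is rejected, which is the essence of the zero-trust approach described above.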

The GKE Security Posture dashboard offers continuous visibility, actionable insights, trend analysis, and compliance monitoring for your infrastructure and workloads in one centralized view, with clear remediation guidance. It automatically scans your GKE clusters to identify potential misconfigurations, vulnerabilities, and policy violations across workloads and nodes. You can also scan workloads at runtime to reduce the risk from OS and language package vulnerabilities. In addition, GKE Policy Controller, which is based on the open-source Open Policy Agent Gatekeeper project, comes with 14 out-of-the-box policy bundles for common compliance and security controls. It helps you adhere to security standards and best practices (e.g., CIS benchmarks) by providing proactive enforcement of policies and preventative guardrails across your clusters.
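
To show what a preventative guardrail checks, here is a simplified sketch written in Python. Policy Controller policies are not written this way (they are expressed as Gatekeeper constraints), so treat the `check_pod` function and the two example rules as assumptions chosen purely to illustrate the concept.

```python
def check_pod(pod: dict, required_labels: set[str]) -> list[str]:
    """Return a list of policy violations for a Pod manifest given as a dict."""
    violations = []

    # Example rule 1: every Pod must carry the required labels
    labels = pod.get("metadata", {}).get("labels", {})
    for label in sorted(required_labels - set(labels)):
        violations.append(f"missing required label: {label}")

    # Example rule 2: no container may run in privileged mode
    for container in pod.get("spec", {}).get("containers", []):
        if container.get("securityContext", {}).get("privileged", False):
            name = container.get("name", "?")
            violations.append(f"container {name} must not run privileged")

    return violations


pod = {
    "metadata": {"labels": {"app": "checkout"}},
    "spec": {"containers": [{"name": "web", "securityContext": {"privileged": True}}]},
}
print(check_pod(pod, required_labels={"app", "team"}))
```

An admission-time guardrail would reject the manifest when the list is non-empty, so the misconfiguration never reaches the cluster.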

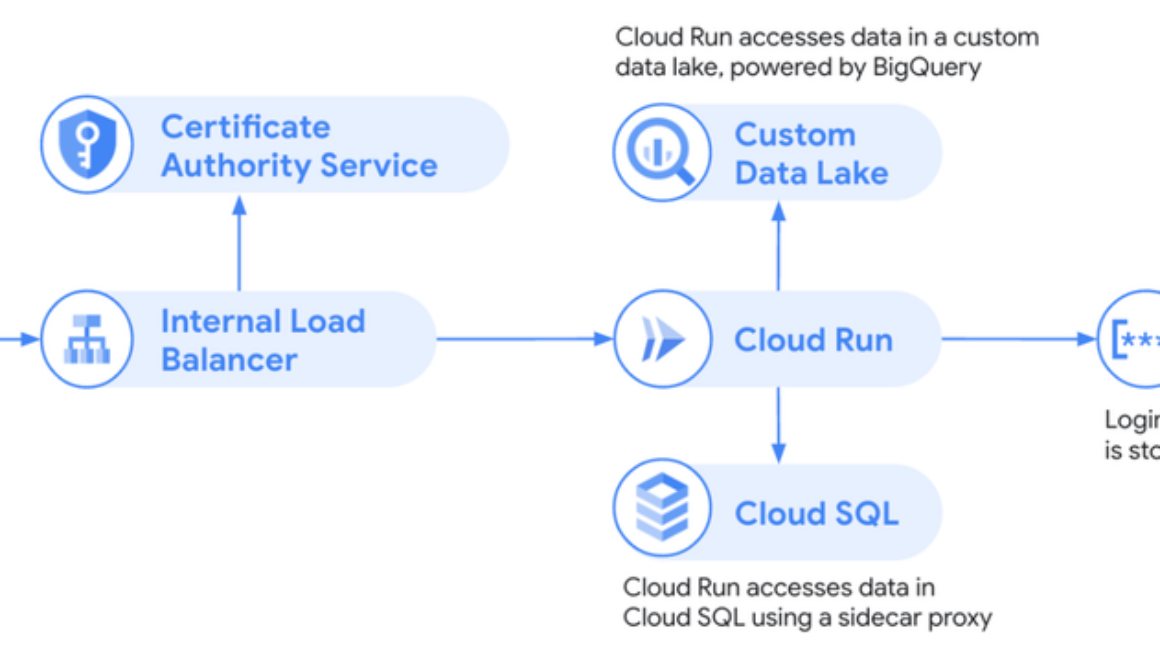

Platform engineering empowers developers by streamlining the software development lifecycle (SDLC), fostering rapid and secure software delivery. Google Cloud offers a robust suite of products and best practices to safeguard every stage of your development process. GKE’s tight integration with Cloud Build, Cloud Deploy, Cloud Armor, Secret Manager, and other Google Cloud services provides a comprehensive framework for ensuring supply chain integrity from code to production.

Embed cloud FinOps for core platform efficiency

Enterprises turn to cloud FinOps to tackle unpredictable cloud costs and better align tech teams with financial objectives. While cost savings are a major motivator, FinOps also prioritizes speed, enabling developers to innovate within established financial controls. Platform engineers can leverage GKE features to optimize costs from the outset. Workload rightsizing, demand-based downscaling, efficient cluster bin packing, and maximizing discount coverage are key things you can focus on for Kubernetes cost optimization.
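
Bin packing is easier to reason about with a small example. The sketch below uses the classic first-fit-decreasing heuristic to place Pod CPU requests (in millicores) onto identically sized nodes. It is only an illustration of the idea; it is not the algorithm used by the Kubernetes scheduler or by GKE autoscaling, and the function name is hypothetical.

```python
def first_fit_decreasing(pod_requests: list[int], node_capacity: int) -> list[list[int]]:
    """Pack CPU requests (millicores) onto as few equal-sized nodes as the heuristic finds."""
    nodes: list[list[int]] = []
    # Placing the largest requests first tends to leave fewer awkward gaps
    for request in sorted(pod_requests, reverse=True):
        if request > node_capacity:
            raise ValueError(f"request {request}m exceeds node capacity {node_capacity}m")
        for node in nodes:
            if sum(node) + request <= node_capacity:
                node.append(request)
                break
        else:
            # No existing node has room, so start a new one
            nodes.append([request])
    return nodes


packed = first_fit_decreasing([500, 1500, 1000, 2000, 500, 2500], node_capacity=4000)
print(len(packed), packed)
```

The fewer nodes you need for the same set of requests, the less idle capacity you pay for, which is why accurate resource requests matter so much for packing efficiency.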

For cost visibility, proper labeling of GKE resources is crucial for fine-grained cost tracking against projects, teams, or even specific applications. With GKE fleet and team scopes, you can label GKE resources automatically for cost transparency. GKE’s powerful 4-way autoscaling delivers direct cost savings by ensuring you only pay for the capacity you truly need. Additionally, creating platform policies that leverage Spot VMs for less critical workloads can significantly reduce your cloud bill.
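
Here is a minimal sketch of why labels matter for cost tracking. It assumes you have exported cost line items as dictionaries with a `cost` value and an optional `labels` mapping; that shape, the `team` label key, and the `cost_by_label` helper are assumptions for illustration and do not mirror a specific billing export schema.

```python
from collections import defaultdict


def cost_by_label(line_items: list[dict], label_key: str) -> dict[str, float]:
    """Total cost per label value; items without the label land in 'unlabeled'."""
    totals: dict[str, float] = defaultdict(float)
    for item in line_items:
        owner = item.get("labels", {}).get(label_key, "unlabeled")
        totals[owner] += item["cost"]
    return dict(totals)


items = [
    {"cost": 10.0, "labels": {"team": "payments"}},
    {"cost": 5.0, "labels": {"team": "search"}},
    {"cost": 2.5, "labels": {"team": "payments"}},
    {"cost": 4.0},  # no labels at all
]
print(cost_by_label(items, "team"))
```

The size of the "unlabeled" bucket is a useful signal in its own right: the larger it is, the less of your spend you can attribute to a team or application.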

FinOps isn’t about setting it and forgetting it. It’s an ongoing journey of monitoring, reporting, and right-sizing to ensure your cloud investments stay healthy. With GKE, you’re not left in the dark after launch. It provides right-sizing recommendations to optimize your clusters and workloads, plus centralized views of resource usage across your fleet and teams. Through continuous optimization, you’ll build a developer platform that’s both efficient and scalable for the long term. FinOps simply gives you the guardrails and processes to make this growth sustainable, keeping you on track with your financial goals.
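
To illustrate the reasoning behind a right-sizing recommendation, the sketch below derives a CPU request from observed usage by taking a high percentile and adding headroom. This is not the method GKE uses to produce its recommendations; the function name, the 95th percentile, and the 15% headroom are all assumptions you would tune for your own workloads.

```python
def recommend_cpu_request(
    usage_millicores: list[int],
    percentile: int = 95,
    headroom_percent: int = 15,
) -> int:
    """Suggest a CPU request (millicores) from observed usage samples."""
    if not usage_millicores:
        raise ValueError("need at least one usage sample")
    ordered = sorted(usage_millicores)
    # Nearest-rank percentile, computed with integer ceiling division
    rank = max(1, -(-percentile * len(ordered) // 100))
    baseline = ordered[rank - 1]
    # Add headroom on top of the baseline, rounding up to a whole millicore
    return -(-baseline * (100 + headroom_percent) // 100)


samples = list(range(100, 2100, 100))  # 20 samples: 100m, 200m, ..., 2000m
print(recommend_cpu_request(samples))
```

Sizing from a high percentile rather than the peak avoids paying for rare spikes, while the headroom leaves room for normal variation between measurements.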

Build globally scalable workloads with managed databases

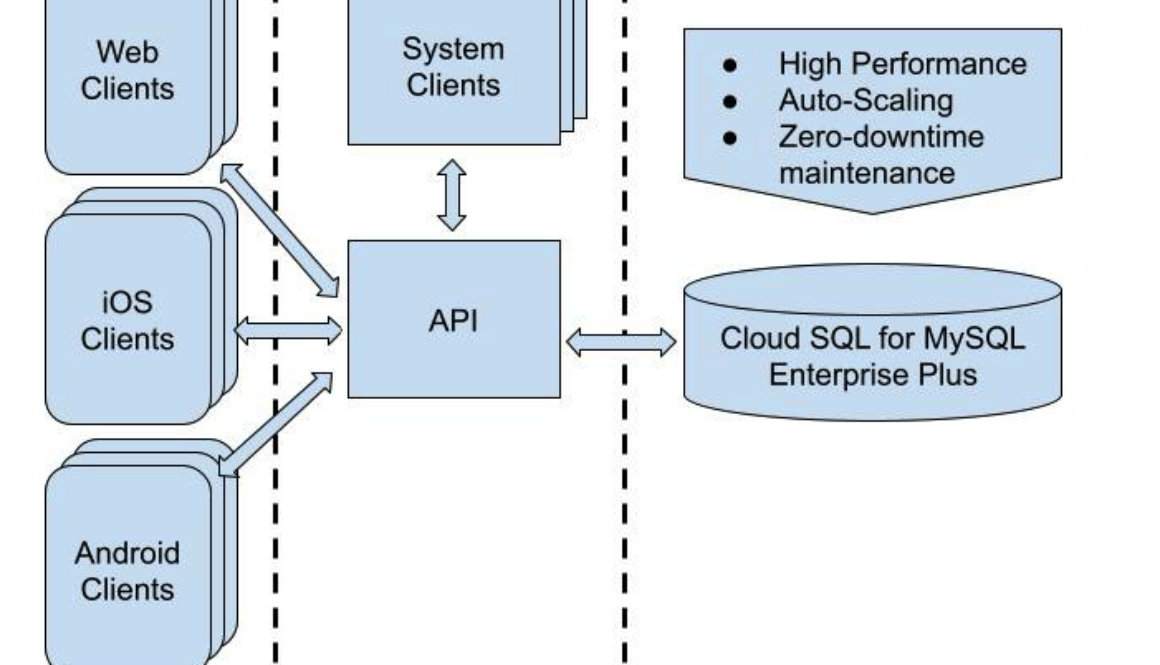

Containers and databases form the foundation of cloud-native workloads like serverless web apps, real-time fraud detection, gen AI use cases, and more. GKE simplifies your technology stack, offering integration with various Google Cloud database options – AlloyDB, Spanner, Firestore, Bigtable, and others. This allows you to choose the ideal database for your application’s needs while enjoying streamlined operations with GKE, including automated scaling, high availability, and backups.

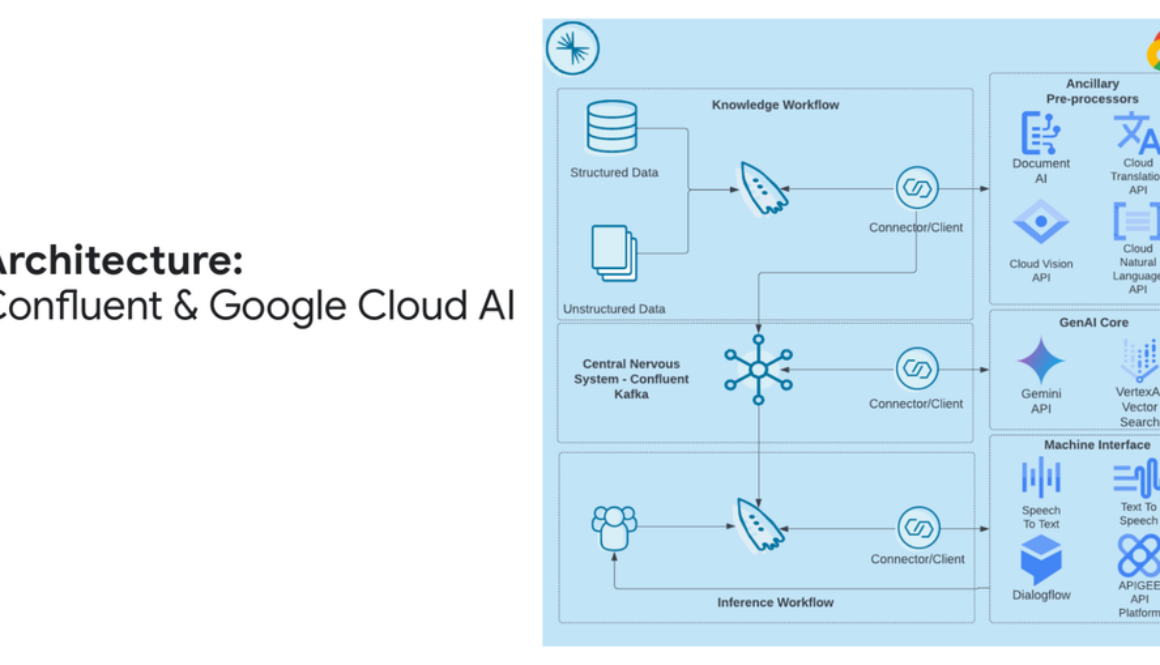

Beyond what we’ve discussed, several other crucial elements contribute to a successful internal developer platform. These include robust application CI/CD pipelines and comprehensive logging and monitoring solutions. To visualize how these pieces fit together, take a look at Figure 1 below. It illustrates the key components for building a highly available, multi-region IDP within the Google Cloud ecosystem.
